Frequently Asked Questions About Embeddings

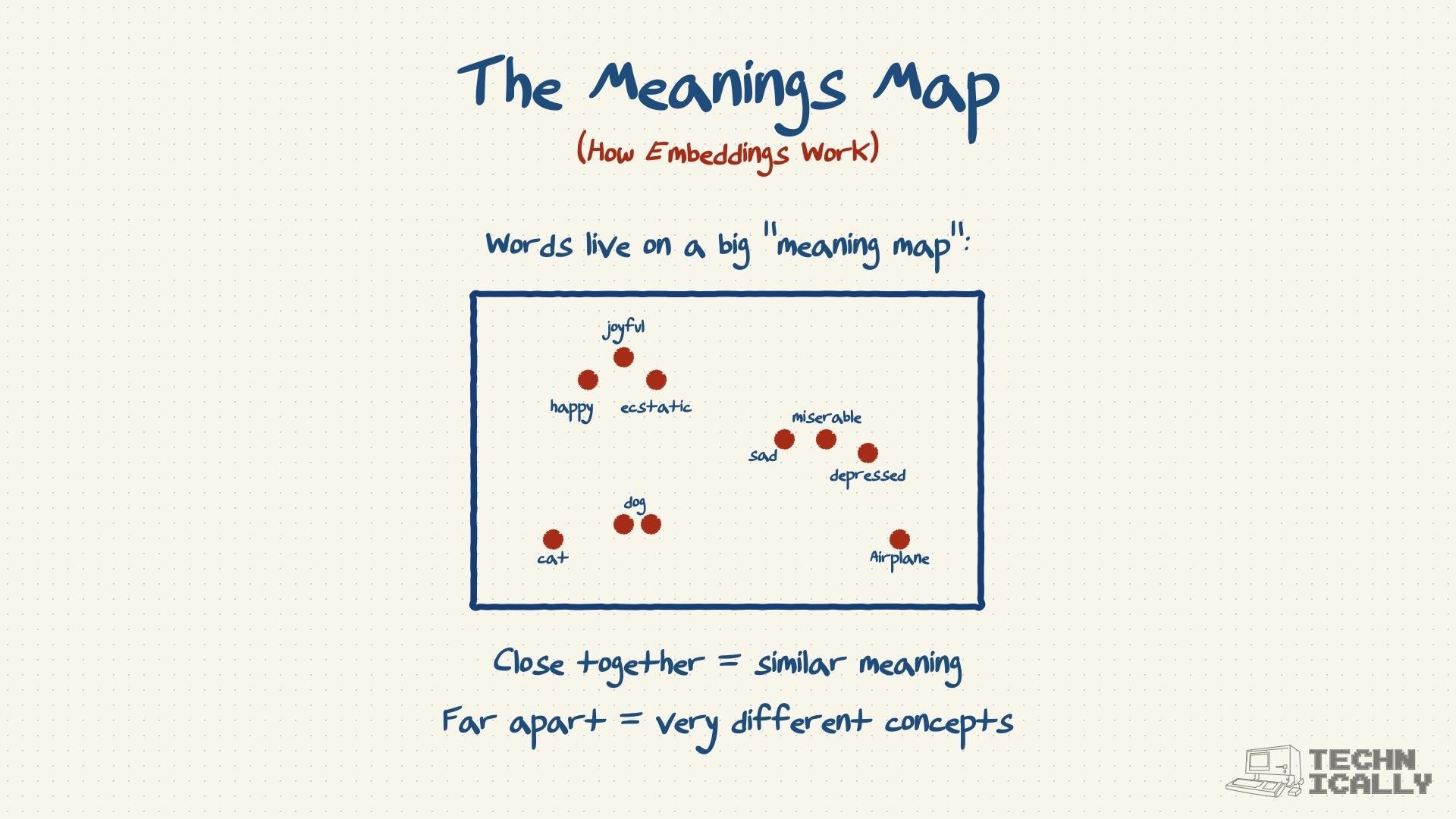

Can you visualize embeddings?

Sort of, but it’s like trying to draw a map of the entire universe on a post-it note. Researchers use dimensionality-reduction techniques (like t-SNE) to squash vectors with hundreds or thousands of dimensions down into 2D or 3D charts. You can see cool clusters, like all the fruits hanging out together or all the chefs in one corner, but you lose a ton of nuance in the process.
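To make the squashing step concrete, here is a minimal sketch using scikit-learn's `TSNE`. The "fruits" and "chefs" vectors are random placeholders invented for illustration, not output from a real embedding model, and the 50-dimension size and perplexity value are assumptions chosen to keep the example small.

```python
import numpy as np
from sklearn.manifold import TSNE

rng = np.random.default_rng(0)

# Stand-in data: two synthetic "clusters" of 50-dimensional vectors.
# Real embeddings would come from a model; these are random placeholders.
fruits = rng.normal(loc=0.0, scale=0.3, size=(10, 50))
chefs = rng.normal(loc=2.0, scale=0.3, size=(10, 50))
vectors = np.vstack([fruits, chefs])

# Reduce 50 dimensions to 2. Perplexity must be smaller than the number of points.
points_2d = TSNE(n_components=2, perplexity=5, random_state=0).fit_transform(vectors)

print(points_2d.shape)  # (20, 2)
```

Each row of `points_2d` is an (x, y) pair you could hand to any plotting library. The distances on the resulting chart are only a rough guide: t-SNE tries to keep neighbors together, not to preserve every distance from the original space.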

How big are embeddings?

It depends on how smart you want your model to be. Older models might use 100-300 dimensions (numbers) per word. The massive brains behind GPT-5 are likely using tens of thousands of dimensions. More dimensions give a model more room to capture nuance, but they also take a hell of a lot more computing power and memory to process.
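A quick back-of-the-envelope calculation shows why size matters. This sketch assumes each number is stored as a 4-byte float (float32), and the one-million-vector table and the dimension counts are hypothetical figures picked for illustration.

```python
def embedding_table_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Storage for a table of embeddings, assuming fixed-width floats (4 bytes = float32)."""
    return num_vectors * dimensions * bytes_per_value

# Hypothetical sizes for illustration: one million vectors at different widths.
for dims in (300, 1_000, 10_000):
    gb = embedding_table_bytes(1_000_000, dims) / 1e9
    print(f"{dims:>6} dims -> {gb:.1f} GB")
```

Storage grows in a straight line with the number of dimensions, and so does the work needed to compare two vectors.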

Who invented embeddings?

The concept has been around in academia for decades, but Word2Vec (released by Google in 2013) was the "iPhone moment" for embeddings. It proved you could capture surprisingly deep relationships between words using relatively simple math.

Do embeddings work in all languages?

Yes! And this is one of the best features. You can create embeddings for Spanish, Japanese, or Swahili, though quality depends on how much of that language the model saw during training. Even cooler? In multilingual models, "cat" in English and "gato" in Spanish end up in the same neighborhood. The math creates a bridge between languages.

What happens when you add or subtract embeddings?

This is the party trick of the AI world. You can literally do math with concepts.

- King - Man + Woman ≈ Queen

- Paris - France + Italy ≈ Rome

It doesn't work perfectly every time, but the fact that you can do arithmetic with meaning is pretty mind-blowing.
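Here is a toy sketch of the King/Queen trick in plain Python. The three-dimensional vectors are hand-made for illustration (the axes loosely stand for royalty, maleness, and femaleness) and do not come from a real model; the `analogy` helper is a hypothetical name, not a library function.

```python
import math

# Hand-made 3-dimensional toy vectors (hypothetical values, not from a real model).
# Axes are loosely: royalty, maleness, femaleness.
TOY_EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.1, 0.1],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, embeddings):
    """Return the word closest to a - b + c, excluding the three input words."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = (w for w in embeddings if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine_similarity(target, embeddings[w]))

print(analogy("king", "man", "woman", TOY_EMBEDDINGS))  # queen
```

Note that the search skips the three input words. That is common practice for analogy lookups, and it is part of why the trick looks so clean: without it, the nearest vector to the result is often just one of the inputs.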