Other FAQs about neural networks

How many nodes does a neural network have?

Neural networks range from a few thousand parameters (the learned weights on the connections between nodes) in small models to billions or even trillions in the largest ones, depending on the model architecture and purpose. For GPT-5, OpenAI has not publicly disclosed a parameter count, and most independent estimates place it in the low trillions or higher. Some analyst write-ups suggest around 1.7–1.8 trillion parameters for a dense-style model, while others argue that if a "mixture-of-experts" (MoE) architecture is used, the total across all experts could reach tens of trillions.

So instead of the roughly 175 billion parameters of GPT-3, you're potentially looking at trillions of connections for GPT-5. That's well beyond the estimated number of stars in the Milky Way, but the exact figure remains a closely guarded secret. Despite the scale, engineers still manage to run it on server farms, and yes, it can still write you a poem about your cat.
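
Nodes and parameters are easy to conflate. The sketch below, assuming a plain fully connected network, counts one weight per connection and one bias per non-input node; with hypothetical layer sizes for a small handwritten-digit classifier, fewer than a thousand nodes already carry about a hundred thousand parameters. The second function is back-of-the-envelope arithmetic for why models of the size estimated above need many machines. It assumes 16-bit weights and 80 GB of memory per accelerator, both illustrative choices rather than known details of any OpenAI deployment, and the function names are made up for this example.

```python
import math


def count_dense_parameters(layer_sizes):
    """Count weights and biases in a plain fully connected network.

    layer_sizes lists the number of nodes per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between adjacent layers
        total += n_out         # one bias per node in the receiving layer
    return total


def min_devices_for_weights(num_parameters, bytes_per_parameter=2, device_memory_gb=80):
    """Fewest accelerators whose combined memory could hold the weights alone.

    Assumes 16-bit weights (2 bytes each) and 80 GB per device by default;
    activations, caches and any training state would need more on top.
    """
    weight_gb = num_parameters * bytes_per_parameter / 1e9
    return math.ceil(weight_gb / device_memory_gb)


# Hypothetical digit classifier: 784 inputs (28x28 pixels), 128 hidden nodes, 10 outputs
sizes = [784, 128, 10]
print(sum(sizes), "nodes")                          # 922 nodes
print(count_dense_parameters(sizes), "parameters")  # 101770 parameters

# Unconfirmed 1.8-trillion estimate, used purely as an input
print(min_devices_for_weights(1.8e12))  # 45
```

Even under these generous assumptions, the weights alone would span dozens of devices before a single request is served.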

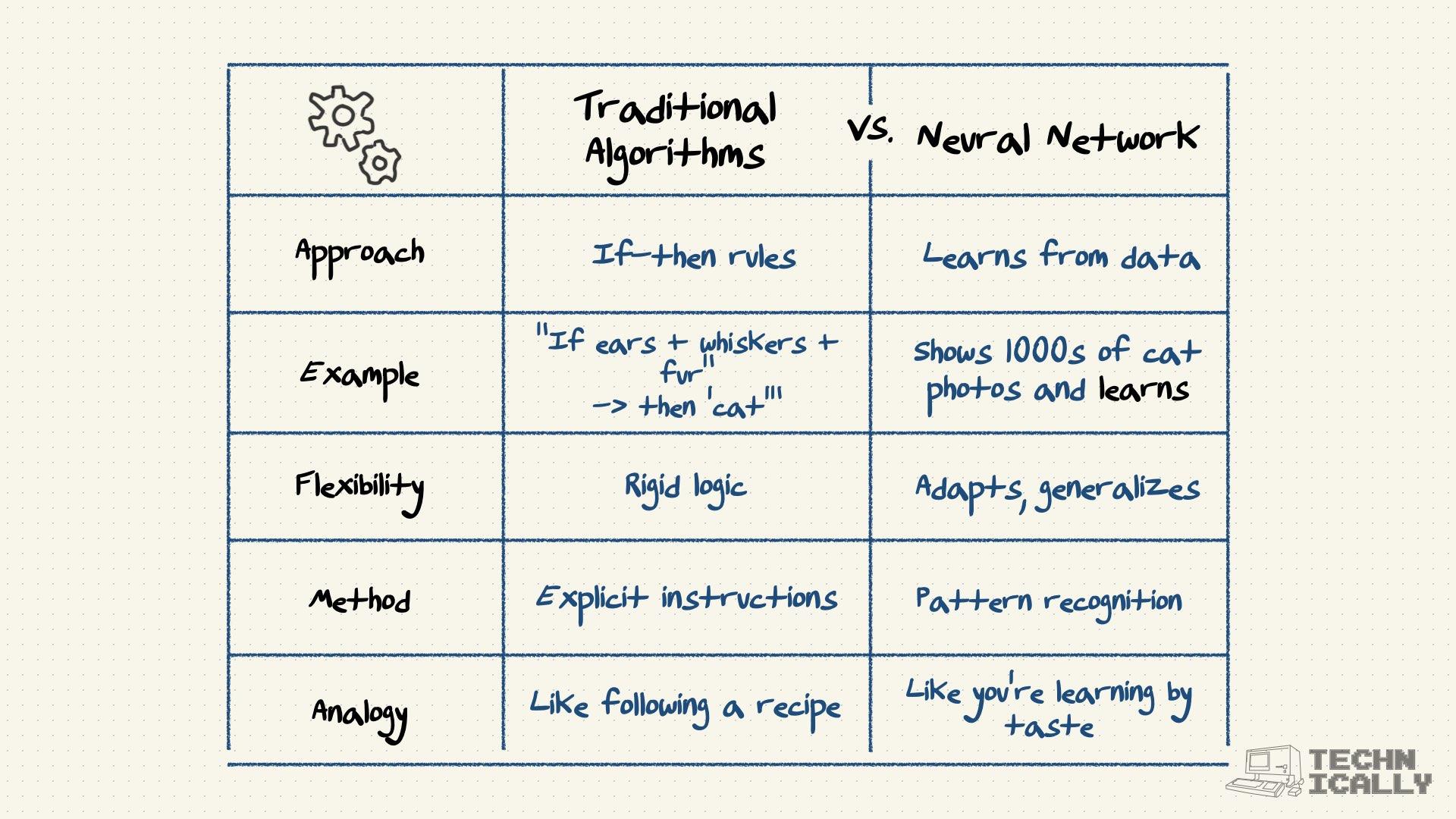

Are neural networks actually like the human brain?

Not really, despite what the marketing materials might suggest. They're inspired by how brain cells connect, but real neurons are vastly more complex and mysterious. Think of neural networks as a very simplified cartoon version of brain function: useful for getting computers to recognize patterns, but about as similar to a real brain as a paper airplane is to a Boeing 747.
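
To make the "simplified cartoon" concrete: assuming the common textbook formulation (a weighted sum of inputs plus a bias, passed through a sigmoid activation), an entire artificial neuron fits in a few lines. The inputs, weights, and bias here are made-up values for illustration.

```python
import math


def artificial_neuron(inputs, weights, bias):
    """One artificial 'neuron': a weighted sum passed through a squashing function.

    That is the whole computation; a biological neuron is far more complex.
    """
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Sigmoid activation squashes the sum into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-weighted_sum))


# Hypothetical inputs and weights, chosen only for illustration
print(artificial_neuron([1.0, 0.0, 1.0], [0.5, -1.0, 0.25], bias=-0.75))  # 0.5
```

A network is many of these wired together, with training adjusting the weights and biases; nothing in the unit itself models spikes, chemistry, or timing.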

Do neural networks think?

This is where things get philosophical real quick. Neural networks are really good at pattern matching and can produce outputs that seem intelligent, but whether that constitutes "thinking" is the kind of question that keeps philosophers and AI researchers up at night. What we can say is that they process information in ways that can seem surprisingly human-like, even if the underlying process is just math.

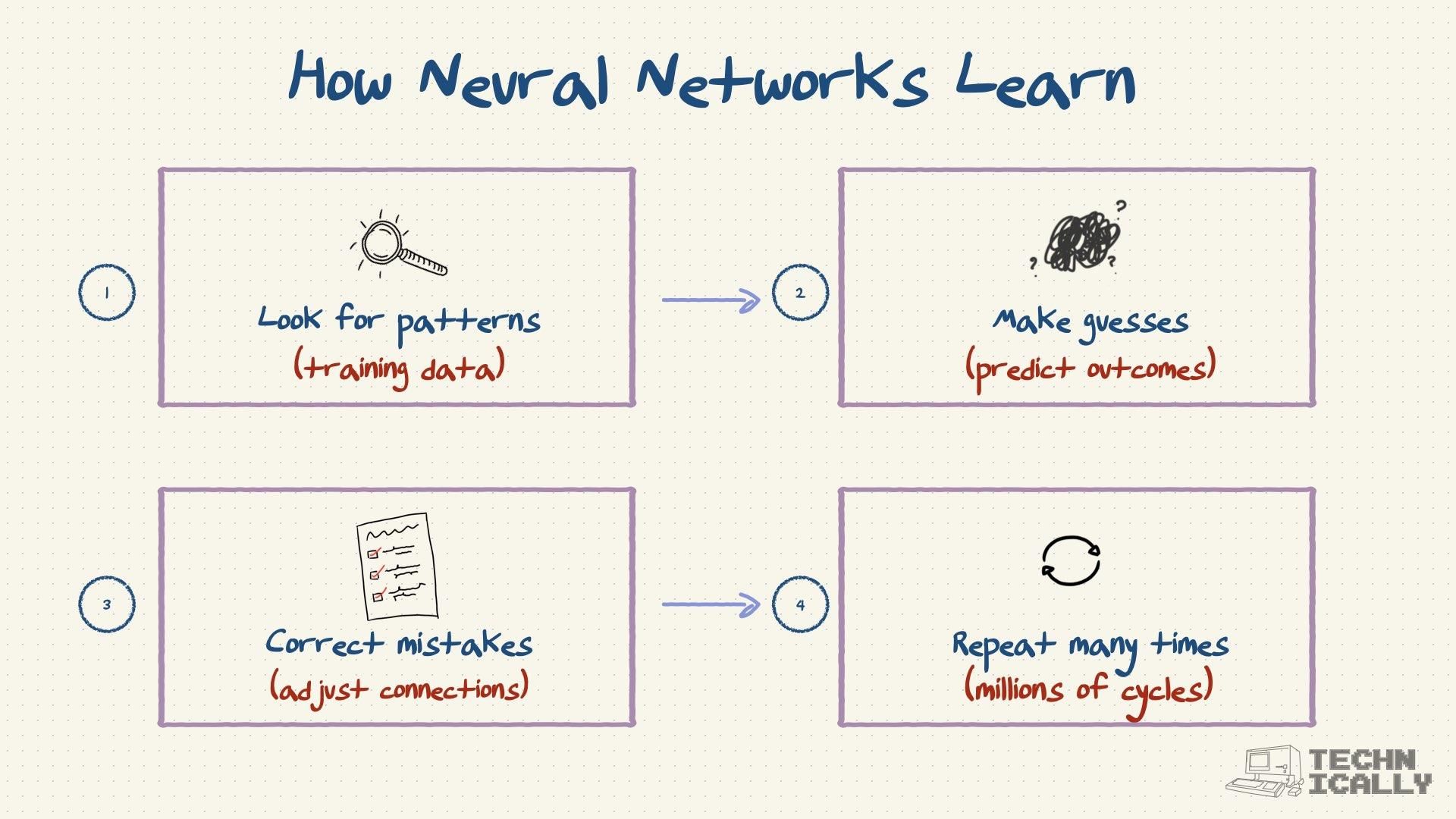

How long does it take to train a neural network?

Anywhere from your lunch break to several months, depending on what you're building. Training the large language models behind ChatGPT took weeks on thousands of specialized processors (GPUs) and cost millions of dollars. A simple network for recognizing handwritten digits might train in minutes to an hour on your laptop. The general rule: the more impressive the AI, the more expensive and time-consuming it was to create.
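
A widely used back-of-the-envelope rule for dense transformer models puts training compute at roughly 6 floating-point operations per parameter per training token (forward plus backward pass). The sketch below applies that rule. GPT-3's published figures (175 billion parameters, about 300 billion training tokens) serve as a reference point, while the GPU count, per-GPU peak throughput, and utilization are hypothetical assumptions, so the resulting day count is an illustration, not a reconstruction of any real training run.

```python
def training_flops(num_parameters, num_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * num_parameters * num_tokens


def training_days(total_flops, num_gpus, peak_flops_per_gpu, utilization):
    """Wall-clock days, given the fraction of each GPU's peak throughput actually used."""
    effective_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / effective_flops_per_second / 86_400  # seconds per day


# GPT-3's published size: 175 billion parameters, ~300 billion training tokens
flops = training_flops(175e9, 300e9)
print(f"{flops:.2e}")  # 3.15e+23

# Hypothetical cluster: 1,000 GPUs at 312 TFLOP/s peak, 40% utilization
print(round(training_days(flops, 1_000, 312e12, 0.4), 1))  # 29.2
```

Because compute scales with parameters times tokens, a model ten times larger trained on ten times more data costs roughly a hundred times more to train.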

Can neural networks make mistakes?

Oh absolutely, and sometimes in spectacular ways. They might confidently identify a chihuahua as a muffin, or translate "hydraulic ram" as "water sheep" (a classic, if possibly apocryphal, machine-translation anecdote). Neural networks are only as good as their training data, and they can be fooled by adversarial examples (inputs deliberately altered in small ways to trigger a wrong answer) or by situations they've never encountered before.
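
Adversarial examples are easiest to see on a linear classifier. The sketch below assumes a made-up linear model with 100 input features and applies the idea behind the fast gradient sign method (FGSM): shift every feature by a small amount epsilon in whichever direction hurts the current prediction. Real attacks target deep networks and obtain the gradient by backpropagation; for a linear score, the gradient with respect to the input is simply the weight vector. All weights and inputs are hypothetical.

```python
def score(weights, bias, x):
    """Linear classifier score: positive means class A, negative means class B."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return 1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, x, epsilon):
    """FGSM-style step against a linear model.

    Each feature moves by at most epsilon, opposite to the sign of its weight,
    which lowers the score as much as that per-feature budget allows.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical 100-feature input and weights, chosen only for illustration
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
x = [0.6 if i % 2 == 0 else 0.5 for i in range(100)]
x_adv = fgsm_perturb(weights, x, epsilon=0.1)

print(score(weights, 0.0, x) > 0)      # True: classified as A
print(score(weights, 0.0, x_adv) > 0)  # False: flipped, though no feature moved more than 0.1
```

The score shifts by epsilon times the sum of the absolute weights, so the effect grows with the number of inputs; that is one common intuition for why high-dimensional inputs such as images are so easy to nudge into a wrong answer.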

What happens when neural networks get bigger?

Generally, they get better at their tasks, but with diminishing returns and exponentially increasing costs. There's also a much-discussed phenomenon called "emergence," where larger networks appear to suddenly develop capabilities that smaller ones lack, such as reasoning through multi-step problems or handling context in surprisingly sophisticated ways.
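
Diminishing returns can be illustrated with a power law of the kind reported in published scaling-law studies of language models, where loss falls as model size raised to a small negative exponent. The function name and both constants below are illustrative assumptions, not values fitted to any particular model, and a smooth curve like this does not capture the sudden jumps described as emergence.

```python
def power_law_loss(num_parameters, scale=8.8e13, alpha=0.076):
    """Illustrative scaling curve L(N) = (scale / N) ** alpha.

    Both default constants are placeholders chosen for illustration.
    """
    return (scale / num_parameters) ** alpha


previous = None
for n in [1e8, 1e9, 1e10, 1e11, 1e12]:
    loss = power_law_loss(n)
    # Compare each tenfold-larger model with the one before it
    note = "" if previous is None else f" ({loss / previous:.3f}x the previous loss)"
    print(f"{n:.0e} parameters -> loss {loss:.3f}{note}")
    previous = loss
```

Every tenfold increase in size shrinks the loss by the same factor (about 0.84 with these constants), while the cost of training grows at least tenfold each time.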