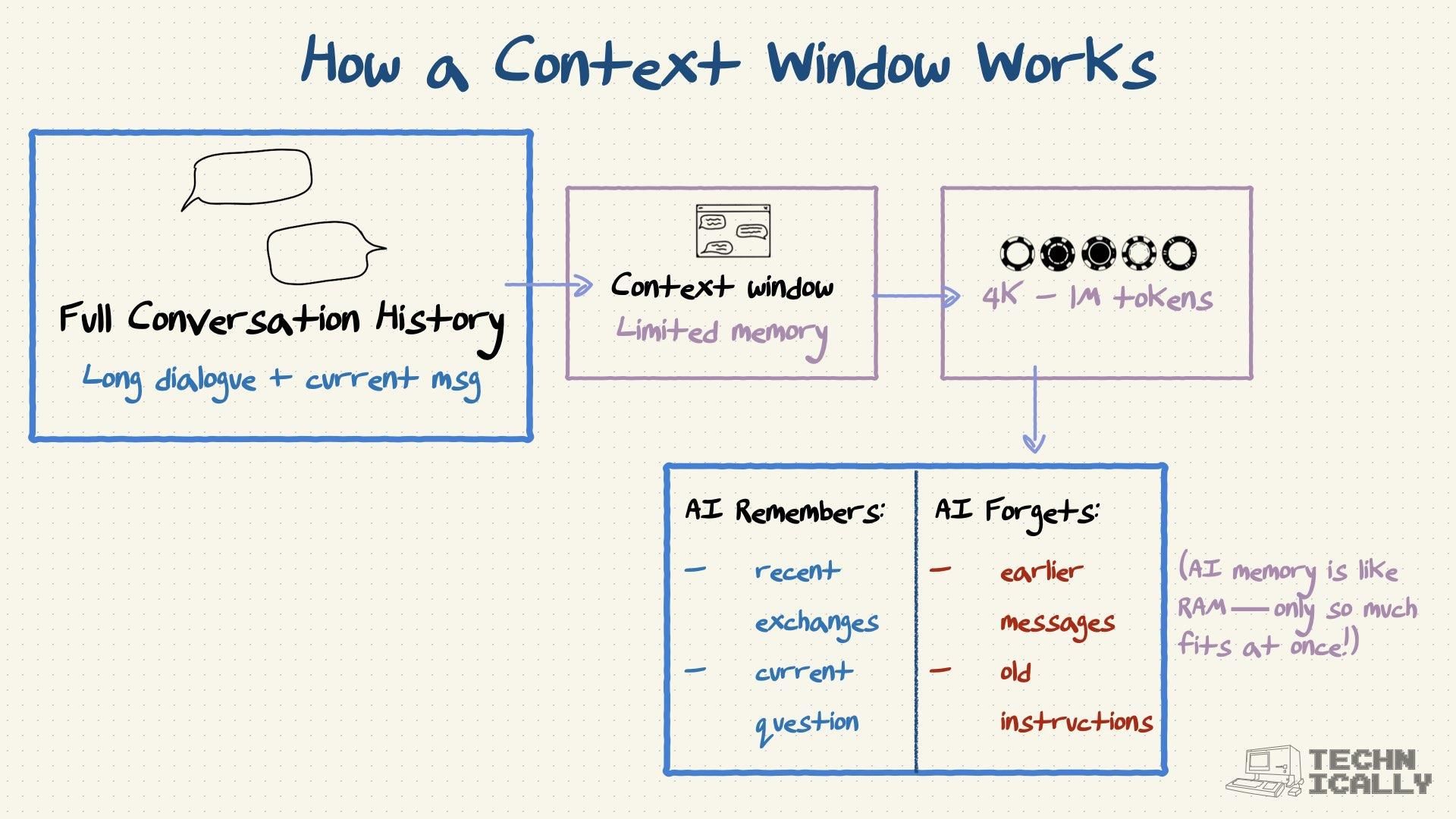

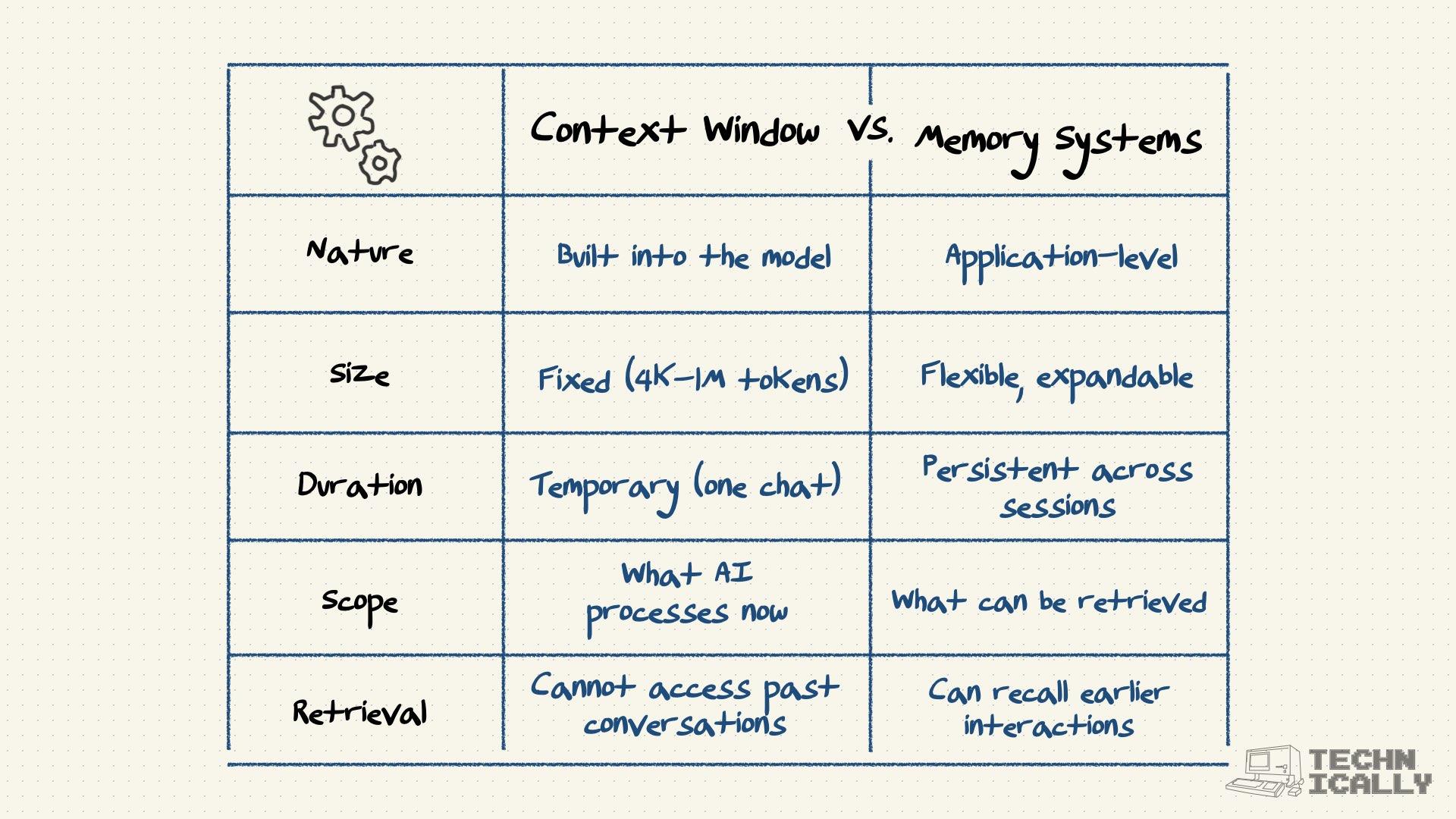

A context window is the maximum amount of text an AI model can take into account at one time.

- It determines how much conversation history and information the AI can consider when responding

- Context windows are measured in tokens, which roughly correspond to words and parts of words

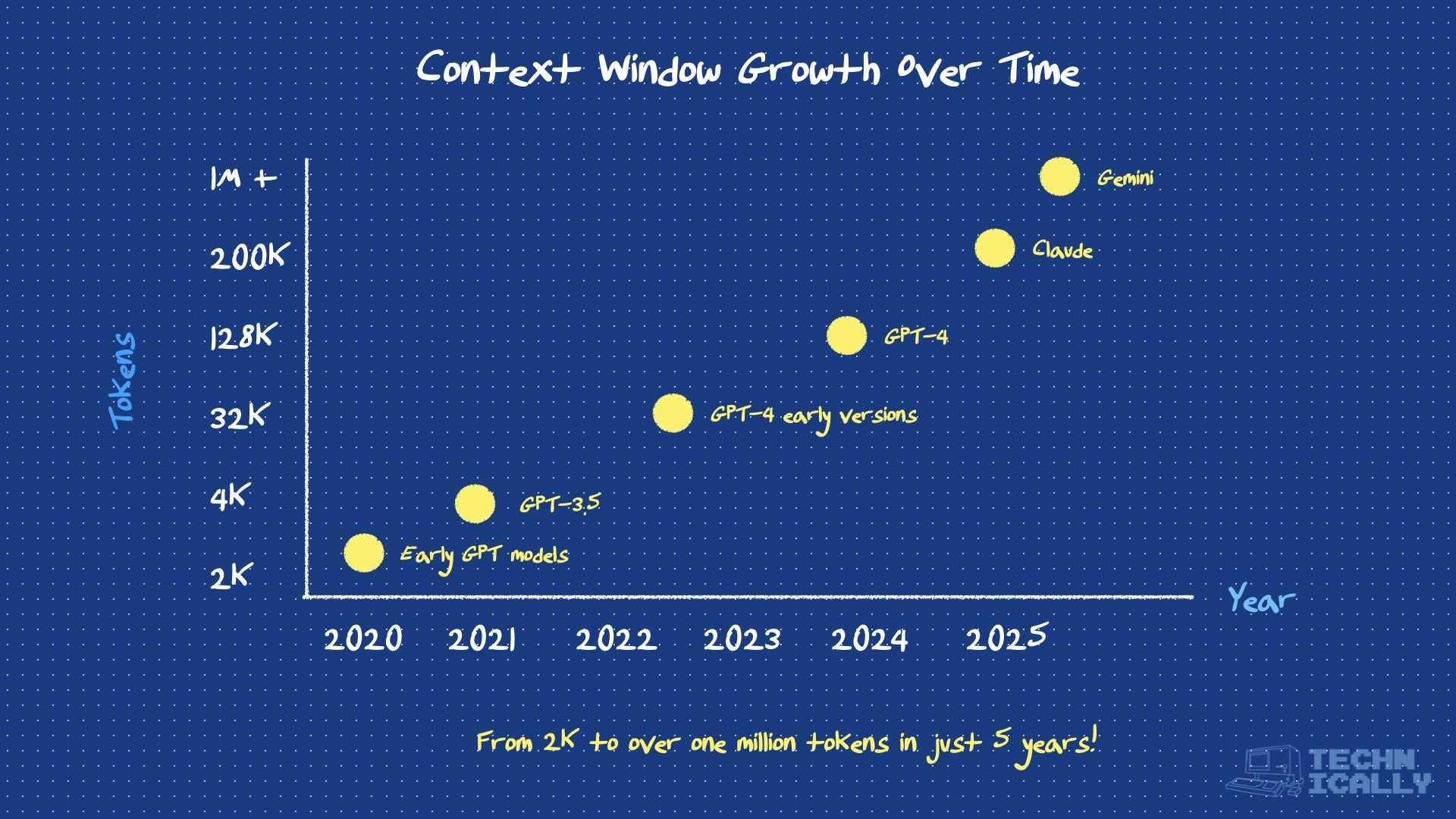

- Different models have different context window sizes, ranging from thousands to millions of tokens (and larger windows may cost extra)

- Think of it as the AI's working memory: a larger context window lets the model process longer, more complex inputs in one go

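The context window is why AI sometimes "forgets" earlier parts of long conversations: once the conversation grows past the window, the oldest content no longer fits and is typically dropped or truncated.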
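
To make the "forgetting" concrete, here is a minimal Python sketch of one common way an application might trim a conversation to fit a token budget. It is an illustration under stated assumptions, not how any particular model or product works: `estimate_tokens` and `fit_to_window` are hypothetical helpers, and the "about 4 characters per token" rule is only a rough heuristic for English text. Real tokenizers differ from model to model.

```python
from collections import deque


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English.
    # A real tokenizer would give different, model-specific counts.
    return max(1, (len(text) + 3) // 4)


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose estimated total fits the budget."""
    kept: deque[str] = deque()
    used = 0
    # Walk backwards from the newest message so recent context survives.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # Everything older than this point is "forgotten".
        kept.appendleft(message)
        used += cost
    return list(kept)


history = [
    "My name is Ada.",
    "I like hiking and chess.",
    "What should I do this weekend?",
]

# With a tiny budget, the oldest message (the user's name) no longer fits.
print(fit_to_window(history, max_tokens=14))
```

With a budget of 14 estimated tokens, only the two most recent messages fit, so the model would never see the user's name. Dropping the oldest messages first is just one possible strategy; others include summarizing older turns or pinning important messages.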