Frequently Asked Questions About RAG

How accurate is RAG compared to fine-tuning?

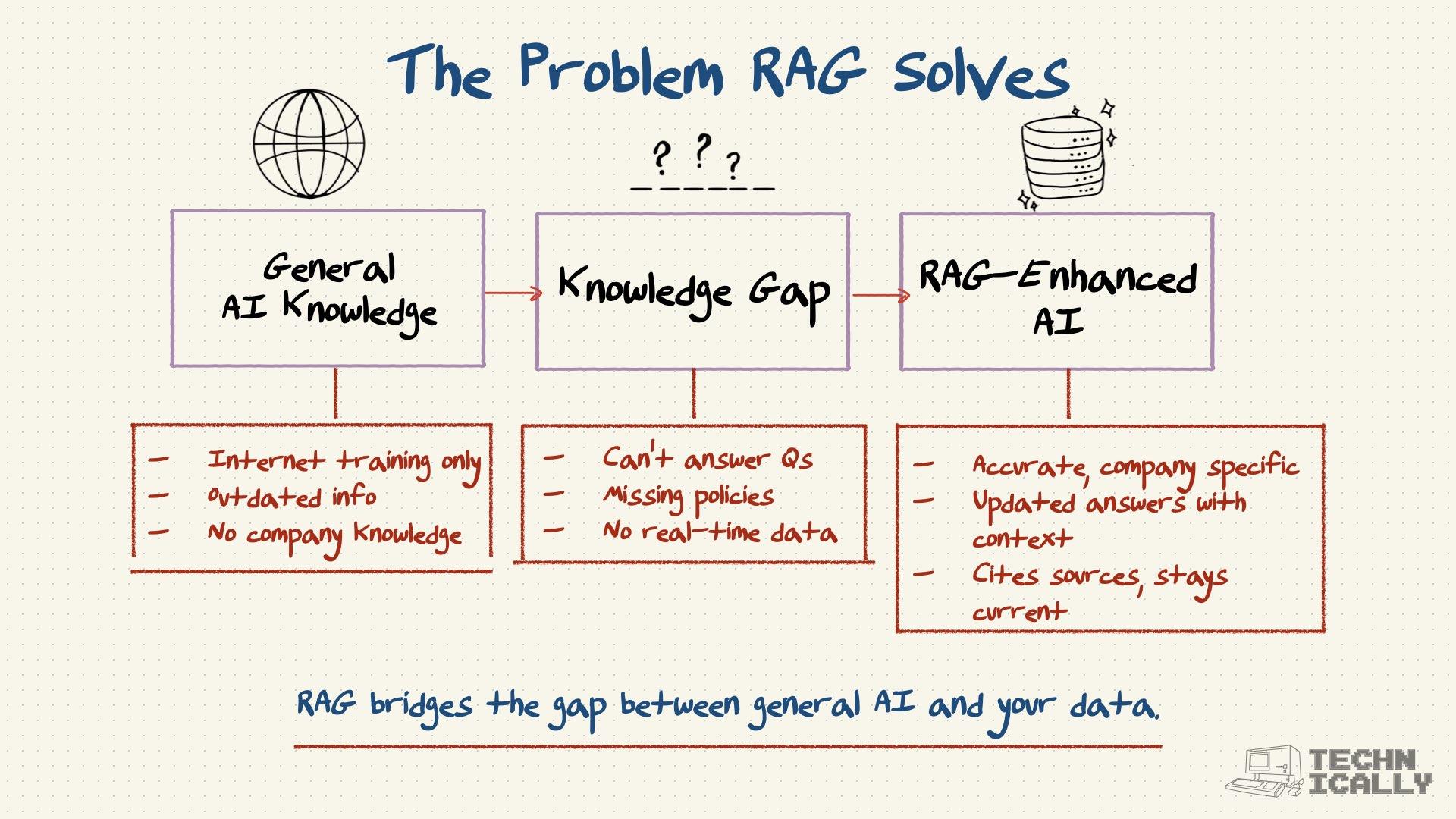

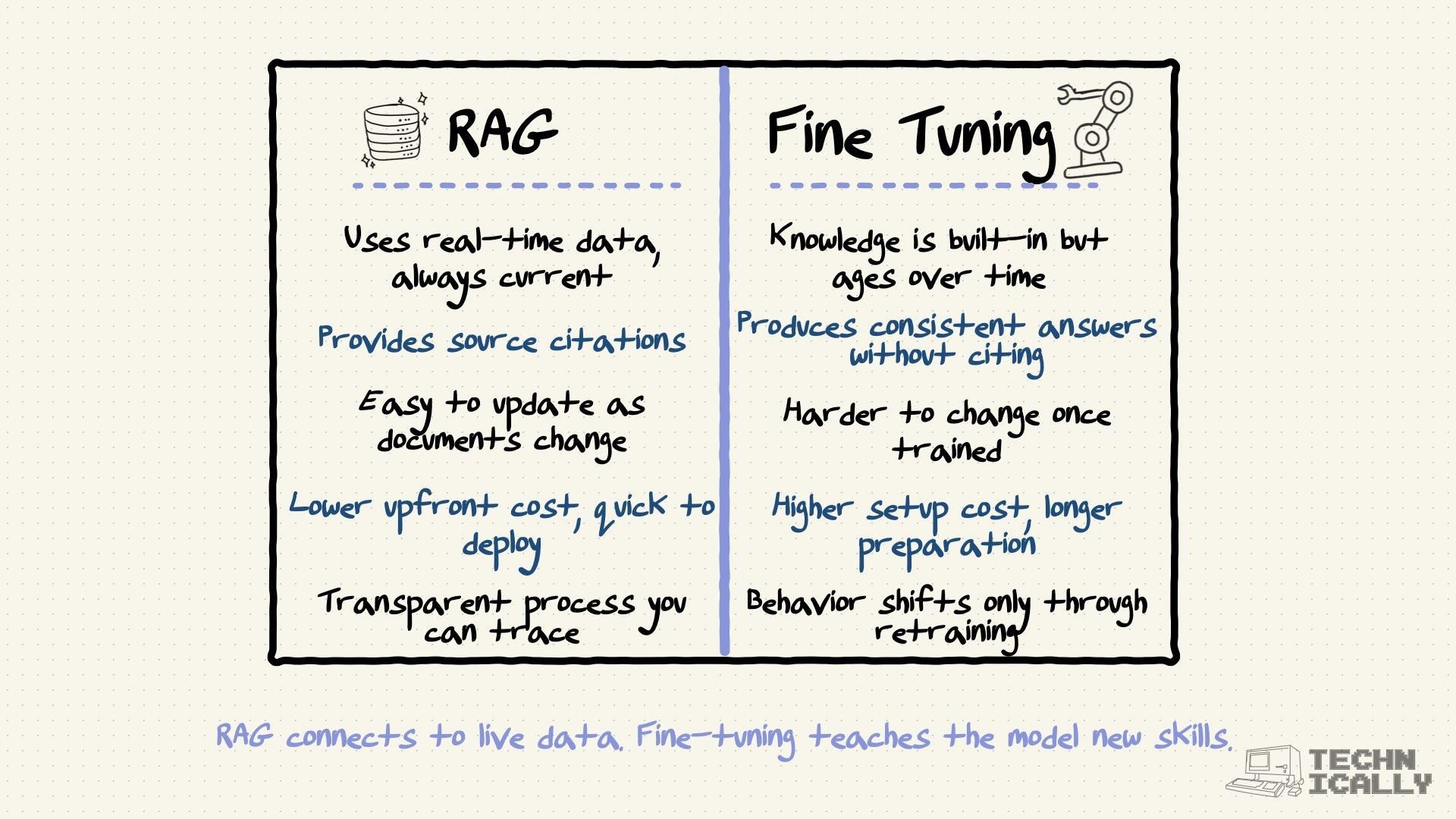

RAG can be very accurate for factual questions because it looks up your actual documents at query time rather than relying on training data that may be out of date. The big advantage is traceability: you can see which passages each answer drew on, so it's much easier to check whether the AI is making stuff up. Fine-tuned models might be better for tasks that need consistent tone or specialized reasoning, but for "what does our policy say about X?" RAG usually wins.
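To make the traceability point concrete, here is a minimal sketch of retrieval that keeps the source next to each passage. It assumes a toy keyword-overlap ranking instead of a real embedding search, and the names `Passage`, `retrieve`, and `build_prompt` are made up for illustration, not taken from any particular library.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical document identifier, e.g. a file path
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase words with surrounding punctuation stripped.
    return {w.strip(".,?!:;\"'()").lower() for w in text.split()} - {""}


def retrieve(question: str, passages: list[Passage], k: int = 2) -> list[Passage]:
    # Toy ranking: count words shared between question and passage.
    # A real system would typically use embeddings or BM25 here.
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(p.text)), p) for p in passages]
    scored = [(score, p) for score, p in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question: str, hits: list[Passage]) -> str:
    # Number each passage and show its source so the answer can cite it.
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(hits, start=1)
    )
    return (
        "Answer using only the numbered sources below and cite them.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    docs = [
        Passage("hr/vacation.md", "Employees accrue 20 vacation days per year."),
        Passage("it/laptops.md", "Laptops are replaced every three years."),
    ]
    question = "How many vacation days do employees get?"
    print(build_prompt(question, retrieve(question, docs)))
```

Because every passage carries its `source`, whatever the model answers can be checked against the cited document.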

What types of documents work best with RAG?

Pretty much any text-based content works: PDFs, Word docs, web pages, database records, wikis. The sweet spot is well-organized content with clear, factual information. Things that are heavy on visuals or rely on context from other documents can be trickier, but you can usually work around those limitations, for example by extracting text from images or adding the missing context to each chunk.

How much does it cost to implement RAG?

Wildly variable depending on your scale. Small setups might run a few hundred bucks per month for database hosting and AI API calls. Enterprise stuff can hit thousands. But it's almost always cheaper than training custom models, especially when you factor in the ongoing maintenance costs. Plus you're not locked into one AI provider—you can switch models without rebuilding everything.
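If you want a rough feel for how the monthly number comes together, a back-of-the-envelope sketch like this can help. Every input is a placeholder you'd swap for your own provider's pricing and your own traffic; the function name and parameters are made up for illustration, and the numbers in the usage example are not real prices.

```python
def monthly_cost(
    queries_per_month: int,
    tokens_per_query: int,
    price_per_1k_tokens: float,
    hosting_per_month: float,
) -> float:
    # API spend: total tokens processed, billed per 1,000 tokens.
    api_cost = queries_per_month * tokens_per_query / 1000 * price_per_1k_tokens
    # Add the flat fee for hosting the database or vector store.
    return api_cost + hosting_per_month


if __name__ == "__main__":
    # Placeholder inputs only; plug in your own figures.
    estimate = monthly_cost(
        queries_per_month=10_000,
        tokens_per_query=2_000,
        price_per_1k_tokens=0.01,
        hosting_per_month=100.0,
    )
    print(f"Estimated monthly cost: {estimate:.2f}")
```

Retrieved context counts toward `tokens_per_query`, so longer passages or more of them per question push the API part of the bill up.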

Can RAG work with real-time data?

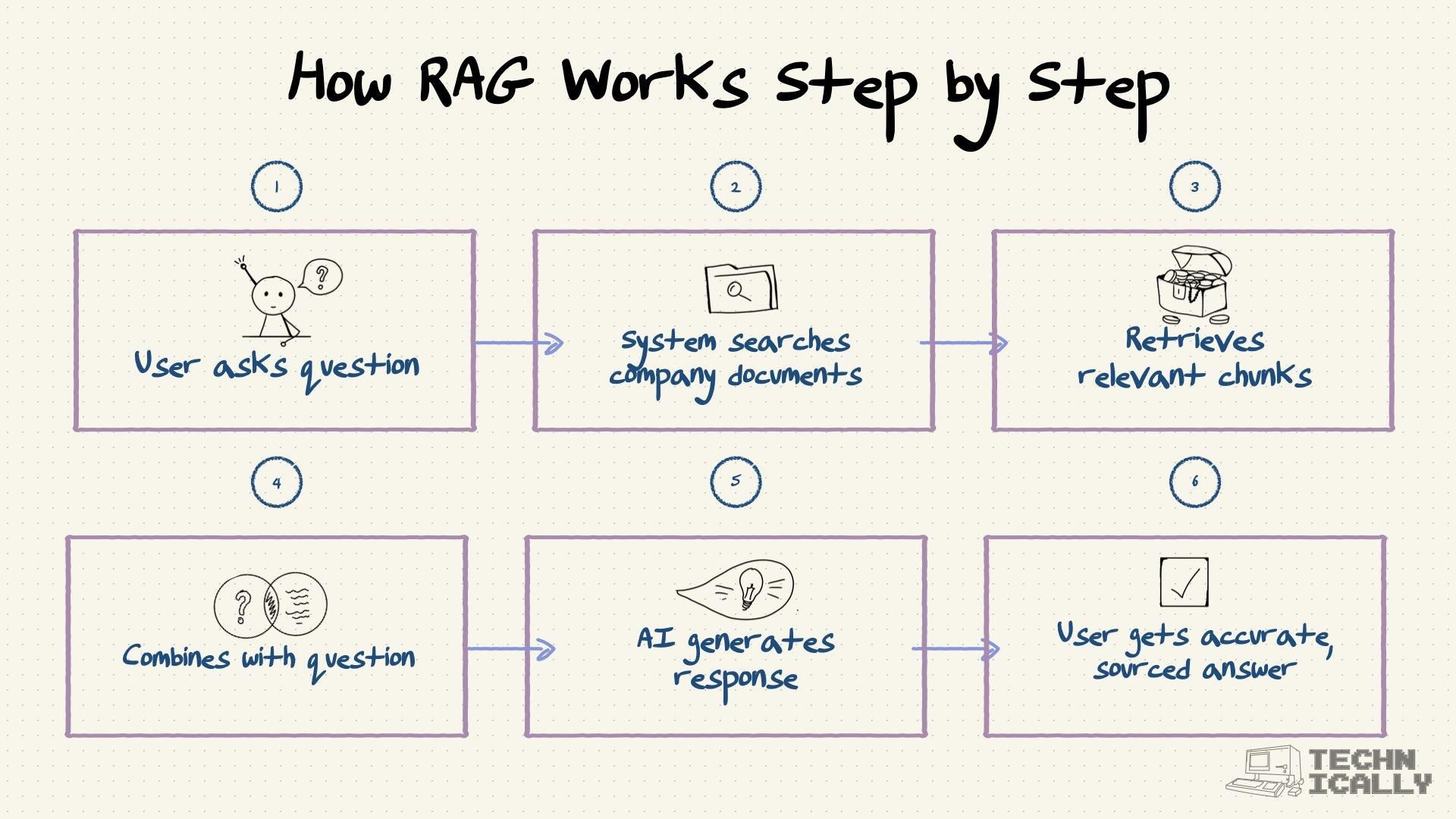

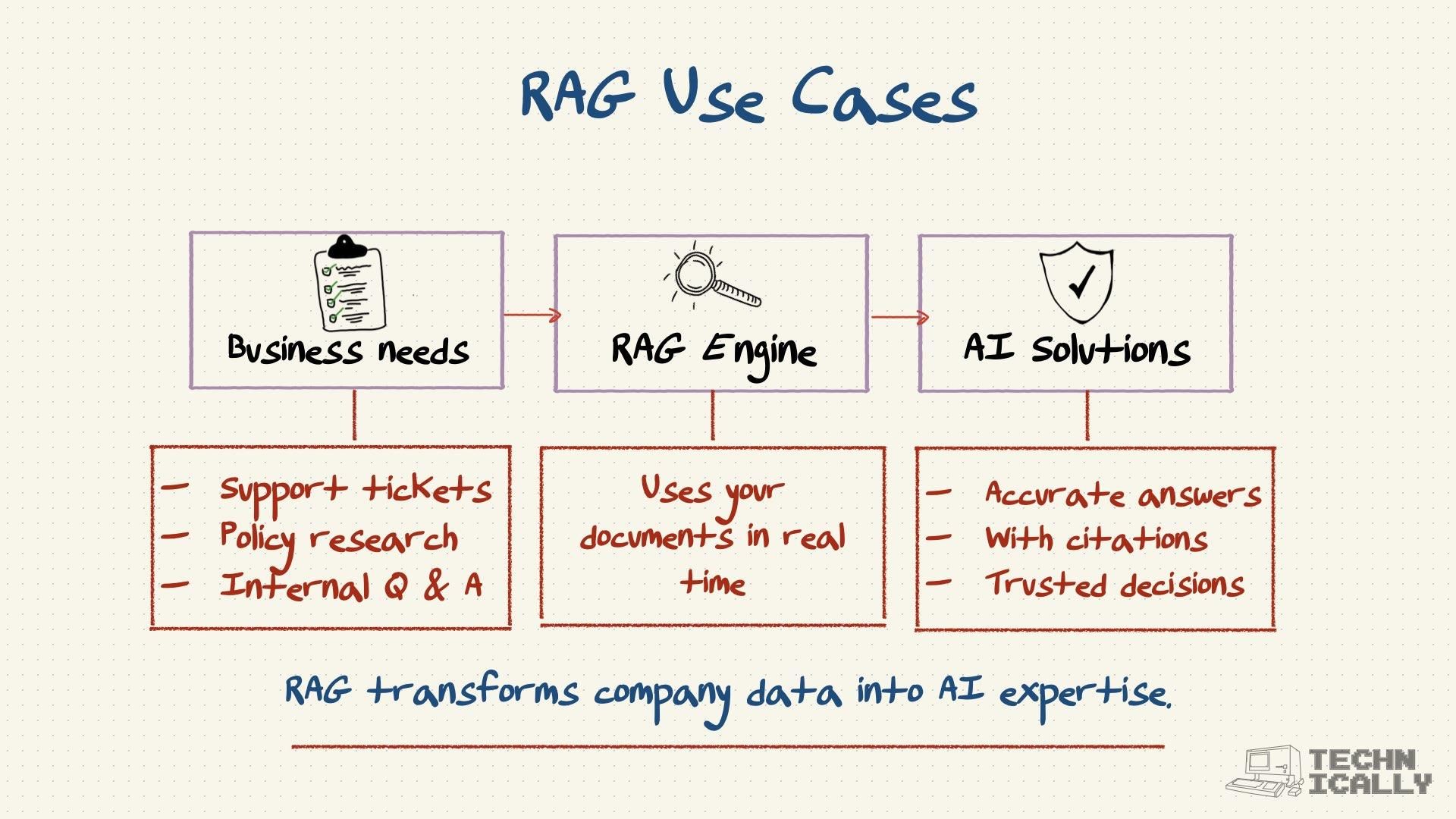

Absolutely, and that's one of its biggest advantages. As long as you keep your knowledge base updated, RAG gives you answers based on the latest info. This is huge for things like customer support where product details change frequently, or any business where "that policy changed last month" is a regular occurrence.
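Keeping the knowledge base updated usually comes down to an "upsert" step that runs whenever a document changes. Here's a minimal in-memory sketch, assuming a hypothetical `KnowledgeBase` class; a real setup would re-chunk and re-embed the document and write to a vector store at the marked step.

```python
import hashlib
from typing import Optional


class KnowledgeBase:
    """Toy in-memory store keyed by document id (illustrative only)."""

    def __init__(self) -> None:
        self._docs: dict[str, tuple[str, str]] = {}  # doc_id -> (hash, text)

    def upsert(self, doc_id: str, text: str) -> bool:
        """Insert or update a document. Returns True if anything changed."""
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        existing = self._docs.get(doc_id)
        if existing is not None and existing[0] == digest:
            return False  # unchanged content, skip the re-indexing work
        # A real system would re-chunk and re-embed the text here.
        self._docs[doc_id] = (digest, text)
        return True

    def delete(self, doc_id: str) -> bool:
        """Remove a retired document so it can no longer be retrieved."""
        return self._docs.pop(doc_id, None) is not None

    def get(self, doc_id: str) -> Optional[str]:
        entry = self._docs.get(doc_id)
        return entry[1] if entry is not None else None


if __name__ == "__main__":
    kb = KnowledgeBase()
    kb.upsert("refund-policy", "Refunds are accepted within 30 days.")
    kb.upsert("refund-policy", "Refunds are accepted within 14 days.")
    print(kb.get("refund-policy"))
```

Hashing the content means unchanged documents get skipped, which keeps frequent sync jobs cheap. Deleting retired documents matters just as much as adding new ones, since a stale policy left in the index can still be retrieved.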

What are the main limitations of RAG?

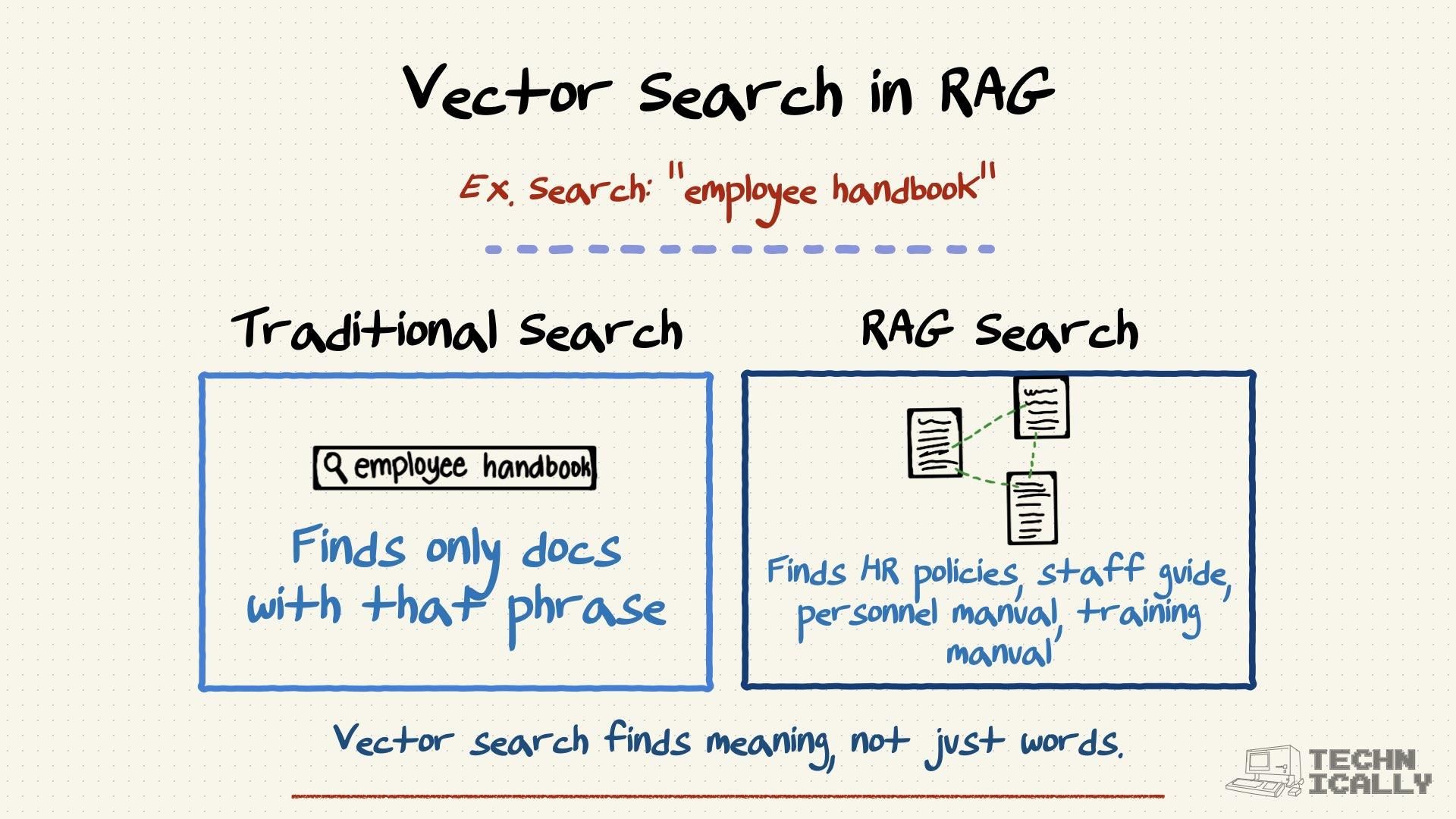

RAG struggles when you need it to connect dots across many different documents or when the answer isn't explicitly written anywhere. It's also only as good as your search system—if the retrieval part misses relevant info, the AI can't use it. And like any system that depends on external data, there's more complexity to manage compared to just throwing a question at ChatGPT.

How do you know if RAG is working well?

Watch for three things: is it finding the right documents when you ask questions, are the AI's answers actually relevant to what you asked, and are the answers factually correct according to your source material? Most teams also track whether users are satisfied with the responses. If people keep asking follow-up questions or seem confused, that's usually a sign something needs tuning.
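The first check, whether retrieval finds the right documents, is the easiest one to put a number on. Below is a minimal sketch of a "hit rate at k" metric, assuming you've hand-labeled which documents are relevant for a small set of test questions. The function name and the data shapes are assumptions for illustration.

```python
def hit_rate_at_k(
    results: dict[str, list[str]],
    relevant: dict[str, set[str]],
    k: int = 3,
) -> float:
    """Fraction of questions with at least one relevant doc in the top k.

    results:  question -> ranked list of retrieved document ids
    relevant: question -> set of document ids labeled as relevant
    """
    if not relevant:
        return 0.0
    hits = 0
    for question, gold in relevant.items():
        top_k = results.get(question, [])[:k]
        if gold & set(top_k):
            hits += 1
    return hits / len(relevant)


if __name__ == "__main__":
    results = {
        "vacation policy?": ["hr/vacation.md", "hr/benefits.md"],
        "laptop refresh?": ["hr/benefits.md"],
    }
    relevant = {
        "vacation policy?": {"hr/vacation.md"},
        "laptop refresh?": {"it/laptops.md"},
    }
    print(hit_rate_at_k(results, relevant, k=2))
```

Answer relevance and factual correctness are harder to score automatically and often need human review or a second model acting as a grader, so this only covers the retrieval side.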