Why do AI models need GPUs?

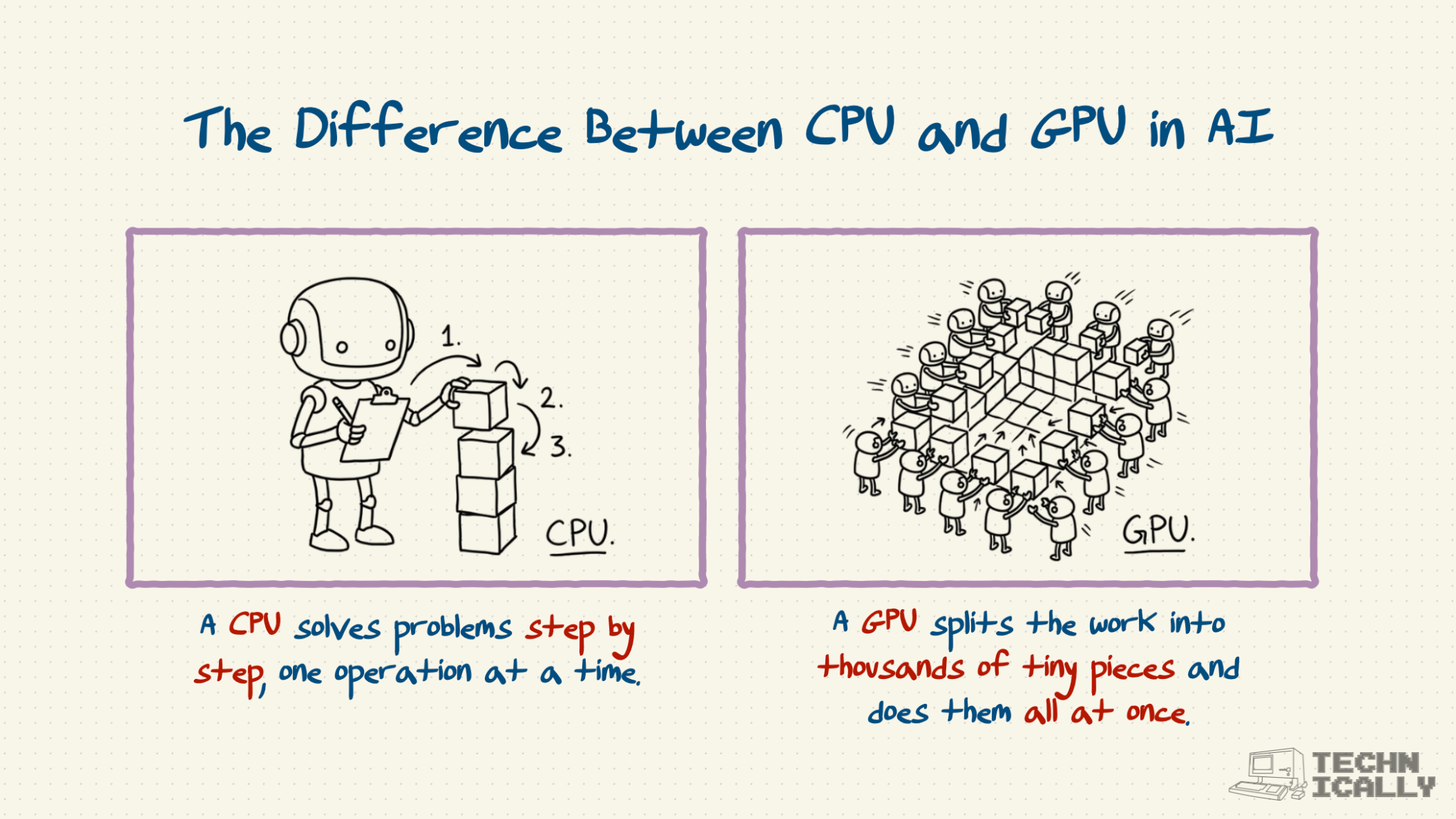

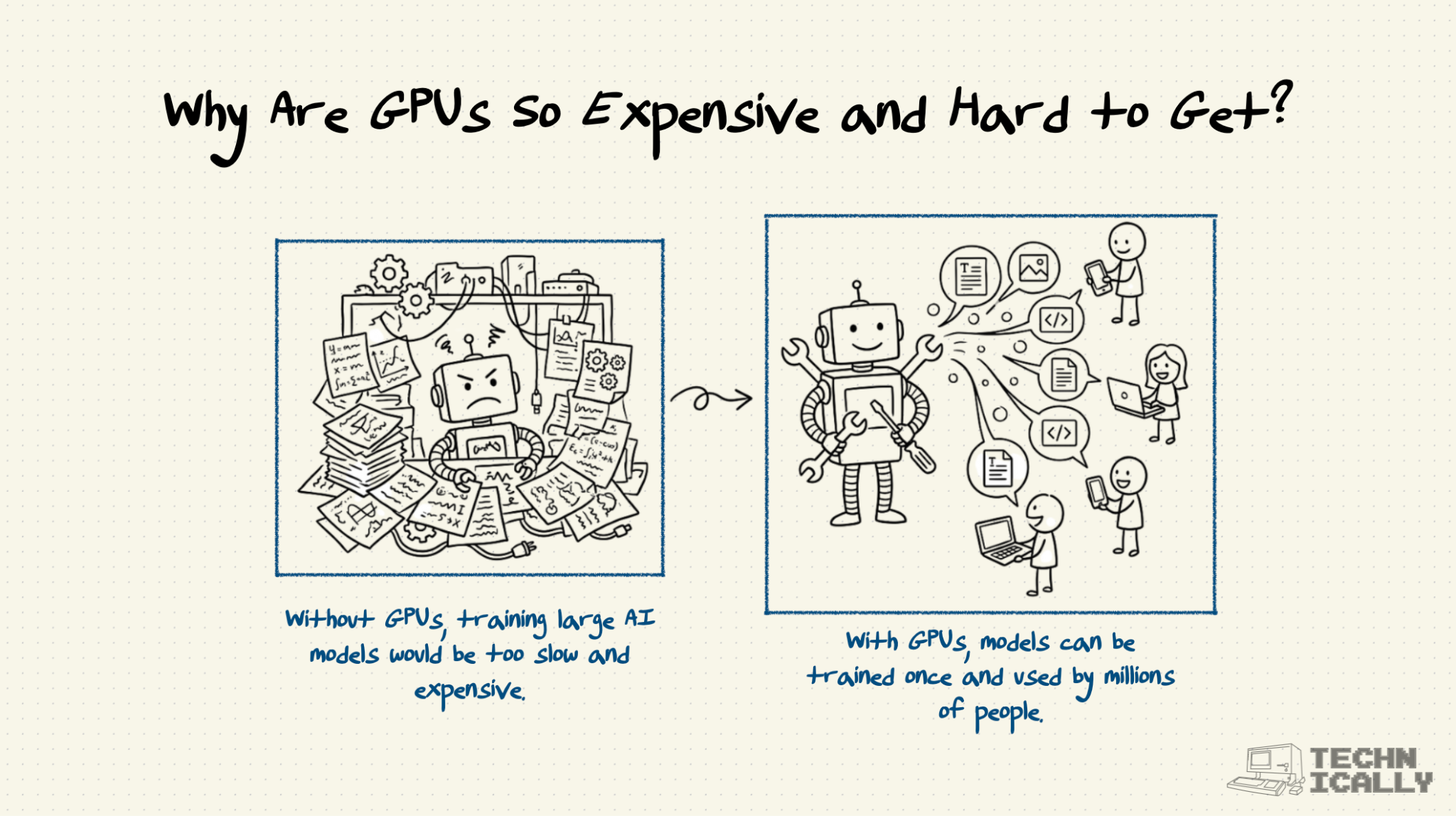

It turns out that another computing task that benefits from parallel processing is training and running AI models. For all their complexity, today's GenAI models are basically just a lot of simple math.

Under the hood, an AI model is one enormous equation. The mechanics of this "equation" are what's called a neural network: tons and tons of neurons, each firing to transmit signals and data. Today's largest neural nets have billions, if not trillions, of parameters, the adjustable numbers that these little neurons use in their calculations.

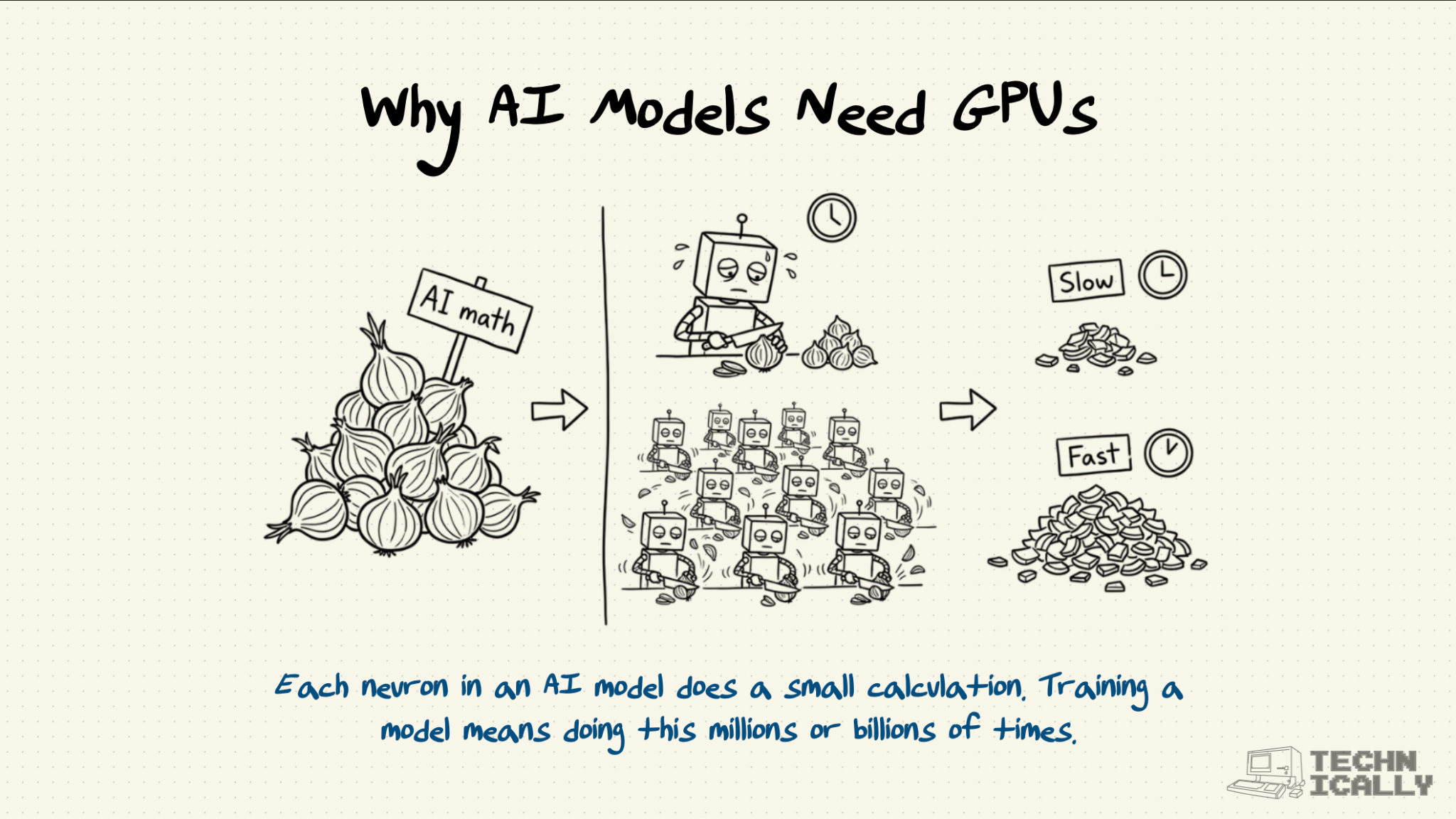

So what is a neuron, exactly? You can think of it as a tiny piece of the equation. Each one is very simple: all it does is take in a few numbers, perform a simple mathematical calculation, and then spit out the answer.
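To make that concrete, here is a minimal sketch of a single neuron in plain Python. The specifics are assumptions for illustration: the function name `neuron`, the example numbers, and the choice of ReLU (turn negatives into zero) as the final step are not taken from any particular model.

```python
def neuron(inputs, weights, bias):
    """One artificial neuron: weigh each input, add them up, apply a simple rule."""
    # Multiply every input by its weight and sum the results, then add the bias.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ReLU activation (an assumed choice): negative totals become 0.
    return max(0.0, total)


# Hypothetical example values, chosen only to keep the arithmetic easy to follow.
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.1)
print(output)
```

That's the whole thing: a handful of multiplications, an addition, and one comparison. A real model repeats this same tiny recipe an enormous number of times.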

The massive neural network equation is a perfect candidate for parallel computing. It's made up of billions of individual components, each of which is incredibly simple on its own, like slicing an onion. If you can do more than one of these at a time, things get a lot faster.
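Here is a rough sketch of why this works, again in plain Python. It runs a tiny, made-up layer of four neurons one at a time, then hands the same work to a pool of threads. The weights, the `run_one` helper, and the thread pool are all illustrative assumptions. Python threads won't actually make this math faster, and a GPU does the equivalent with thousands of hardware cores. The point is only that each neuron's result doesn't depend on the others, so the order doesn't matter.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, followed by ReLU (an assumed choice)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return max(0.0, total)


# Hypothetical inputs and a hypothetical layer of four neurons: (weights, bias) each.
inputs = [1.0, 2.0, 3.0]
layer = [
    ([0.5, 0.0, 0.0], 0.0),
    ([0.0, 1.0, 0.0], -1.0),
    ([0.0, 0.0, -1.0], 0.0),
    ([1.0, 1.0, 1.0], 0.0),
]


def run_one(params):
    """Compute one neuron's output; it needs nothing from its neighbors."""
    weights, bias = params
    return neuron(inputs, weights, bias)


# One at a time, like a CPU core working down a list.
sequential = [run_one(p) for p in layer]

# All handed out at once; map() returns results in the original order.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(run_one, layer))

print(sequential == parallel)
```

Both approaches give the same answers, which is exactly what makes the work safe to split up across many cores.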