Fine tuning is the process of taking a pre-trained AI model and specializing it for your specific use case.

- Fine tuning is just like it sounds: taking a general-purpose tool and customizing it for your exact needs

- It’s much cheaper and faster than training a model from scratch

- Fine tuning is well suited to businesses that need AI to behave consistently in their domain

Fine tuning turns a jack-of-all-trades AI into an expert in your particular field or task.

What is fine tuning?

Fine tuning is the process of taking a pre-trained model (usually a large one) and adapting it to a specific task by training it further on a smaller dataset. Big pre-trained foundation models are like giant hammers, but your problem might be a small nail. Fine tuning adapts them to your specific data and situation.

Fine tuning solves a common problem: you have a powerful AI model like GPT-4 that's great at general tasks, but you need it to perform consistently well in your specific domain. Maybe you need it to write in your company's tone of voice, understand your industry's jargon (healthcare terminology, for example), or follow your particular formatting requirements.

A general model might give you inconsistent results: sometimes perfect, sometimes way off base. Maybe the tasks you’re using it for are well represented in the massive dataset the model was pre-trained on, but maybe they’re not. And even if they are, the model may not apply that knowledge reliably. Fine tuning teaches the model to behave the way you want, consistently, by showing it many examples of good performance in your specific context.

It's like the difference between hiring a talented generalist who still needs to learn your company's way of doing things and hiring someone who already knows exactly how you operate.

What are the benefits of fine tuning?

Fine tuning offers several advantages over other approaches to AI customization:

Predictable Performance

Instead of hoping a general model will handle your use case well, you get consistent, reliable behavior tailored to your needs.

Faster Time to Value

You can have a specialized model running in weeks rather than the months or years required to train a brand-new model.

Retained General Knowledge

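Fine-tuned models generally keep their broad understanding while gaining specialized skills, so you don't have to give up the foundation to gain the specialization, although training too hard on a narrow dataset can erode some general abilities.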

Better Customer Experience

A carefully fine-tuned model can outperform off-the-shelf alternatives in your domain. When your competitors are using generic ChatGPT and you're using a model trained on your specific processes and data, customers can often see the difference in quality.

When do you need to fine tune a model?

Fine tuning makes sense when you need consistent, specialized behavior that general models can't reliably provide:

Domain-Specific Language

When your field uses terminology, formats, or conventions that general models don't handle well. Legal documents, medical records, and technical specifications often benefit from fine tuning.

Consistent Tone and Style

If you need AI to write in your company's specific voice (formal, casual, technical, or creative), fine tuning can make outputs far more consistent.

For example, customer support responses that perfectly match your brand voice, or marketing copy that sounds like it came from your team.

Specialized Tasks

When you need the model to follow specific procedures, formats, or decision-making processes that are unique to your organization.

Regulatory Requirements

In industries with strict compliance needs, fine-tuning can help ensure AI outputs meet specific regulatory standards. For example, healthcare providers might fine-tune models to handle patient data according to HIPAA requirements, financial firms might ensure outputs align with SEC disclosure rules, or legal teams might train models to recognize and flag privileged information.

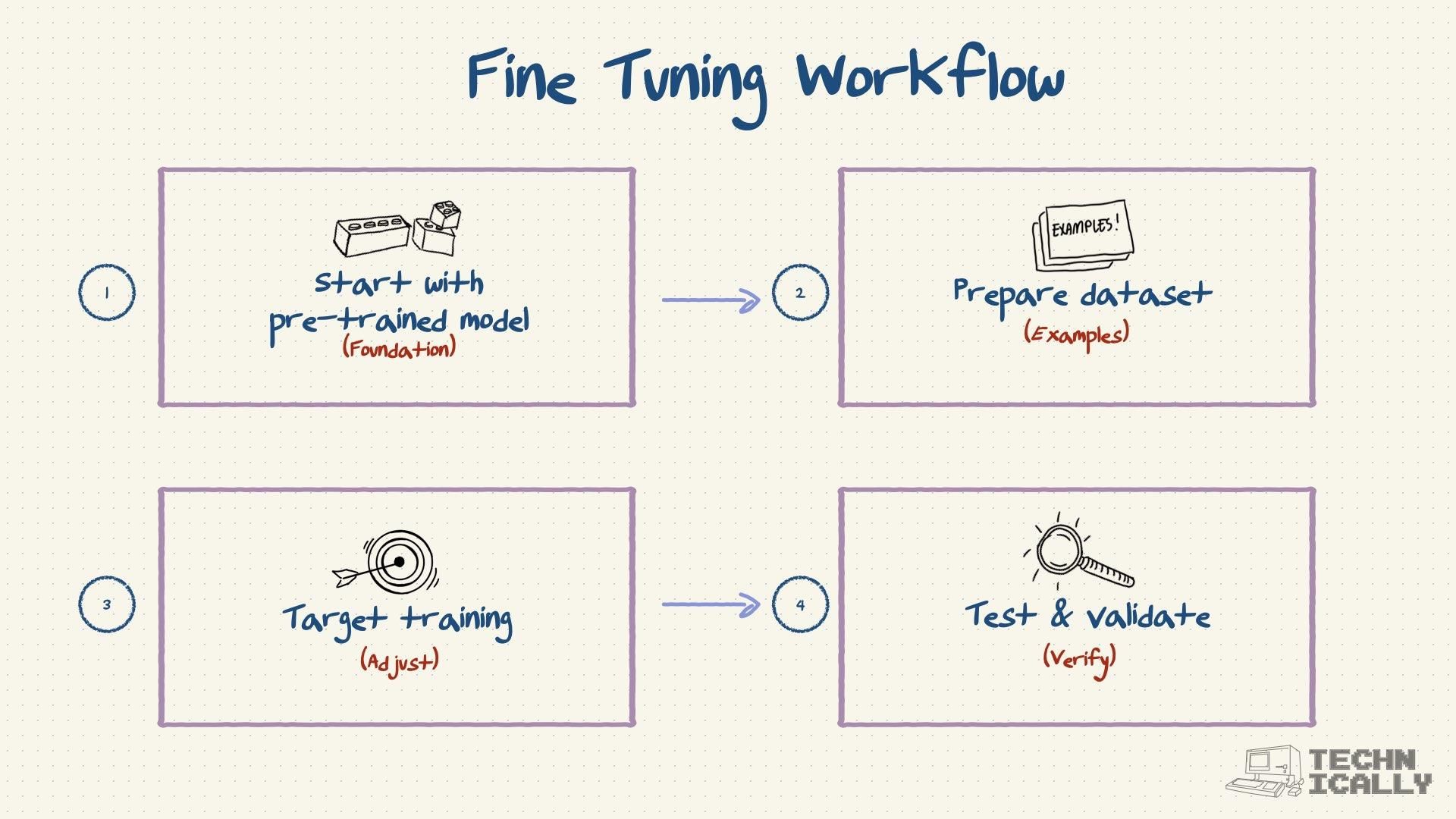

How does fine tuning work?

Fine-tuning builds on top of a model that's already been trained on massive amounts of general data. Instead of starting from scratch, you're essentially giving the model a specialized education.

You begin with a pre-trained model that already understands language, context, and general reasoning. Then you show it examples of how you want it to behave in your specific situation: often a few hundred examples, compared with the enormous volume of text used for initial training.
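The "specialized education" idea can be shown with a deliberately tiny analogy in Python. This is not how an LLM is built: a two-parameter linear model stands in for the pre-trained network. It is "pre-trained" on plenty of general data (y = 2x) and then fine-tuned by continuing plain gradient descent from those learned weights on four domain examples (y = 2x + 1). All data, learning rates, and step counts are made up for illustration.

```python
# Toy analogy only: a two-parameter linear model y = w*x + b stands in for an LLM.

def mse(params, data):
    """Mean squared error of the line (w, b) on (x, y) pairs."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(params, data, lr=0.05, steps=50):
    """Plain gradient descent on MSE, starting from the given (w, b)."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)

# "Pre-training": plenty of general data following y = 2x.
general_data = [(k / 10, 2 * k / 10) for k in range(-50, 51)]
pretrained = train((0.0, 0.0), general_data, steps=500)

# "Fine-tuning": four domain examples following y = 2x + 1.
domain_data = [(-1.0, -1.0), (-0.5, 0.0), (0.5, 2.0), (1.0, 3.0)]
fine_tuned = train(pretrained, domain_data, steps=20)    # warm start from pre-trained weights
from_scratch = train((0.0, 0.0), domain_data, steps=20)  # same budget, no warm start

print(f"fine-tuned loss:   {mse(fine_tuned, domain_data):.4f}")
print(f"from-scratch loss: {mse(from_scratch, domain_data):.4f}")
```

With the same 20 update steps, the warm-started model ends up much closer to the domain data than the one trained from zero, which is the intuition behind fine tuning. Real fine tuning updates billions of parameters with more sophisticated optimizers, so treat this strictly as an analogy.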

This is similar to the instruction fine-tuning step used to create ChatGPT:

To turn the model from a knowledgeable blob into a helpful assistant, developers use something called instruction fine-tuning. This further trains the model to act specifically as the helpful, concise assistant that you know and love. And they pull it off by, well, training the model to do it on question/answer pairs.
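In practice, those question/answer pairs are usually stored as a file of structured examples. The Python sketch below writes them as JSON Lines, one chat-style record per line with system, user, and assistant messages. That layout resembles the chat fine-tuning format used by OpenAI, but treat it as an assumption and check your provider's documentation for the exact schema. The system prompt, company name, and sample pairs are hypothetical.

```python
import json

# Hypothetical Q/A pairs written in the tone and format you want the model to learn.
qa_pairs = [
    ("How do I reset my password?",
     "Go to Settings > Security and choose Reset password. You'll get an email within a few minutes."),
    ("Can I change my billing date?",
     "Yes. Open Billing, select Change date, and pick any day from 1 to 28."),
]

SYSTEM_PROMPT = "You are a concise, friendly support assistant for ExampleCo."  # hypothetical

def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one question/answer pair in a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},  # the behavior the model should learn
        ]
    }

def write_jsonl(pairs, path):
    """Write one JSON object per line (JSON Lines)."""
    with open(path, "w", encoding="utf-8") as f:
        for q, a in pairs:
            f.write(json.dumps(to_chat_example(q, a), ensure_ascii=False) + "\n")

write_jsonl(qa_pairs, "train.jsonl")
```

Each line is one complete example, so the file can grow from a few dozen records to thousands without changing the code.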

What's the difference between fine tuning and training?

Training and fine tuning are related but serve very different purposes. Fine tuning is, in a sense, a type of training, but it is very different from the massive initial pre-training that builds something like Claude:

Initial Training (Pre-training)

- Uses massive datasets (hundreds of terabytes encompassing "most of the internet")

- Takes months and costs millions of dollars (or more) in computational resources

- Teaches the model general language understanding, grammar, facts, and broad reasoning

- Creates a foundation that can handle almost any topic but lacks specialized expertise

Fine Tuning

- Uses smaller, specialized datasets (hundreds to tens of thousands of examples)

- Takes hours to days and can cost from under $100 to thousands of dollars depending on the approach

- Teaches the model to behave consistently in specific scenarios or match specific formats

- Builds on the existing foundation to create specialized expertise
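The "under $100 to thousands of dollars" range follows from simple arithmetic when a hosted service bills fine tuning by training tokens, as some providers do. The sketch below assumes that billing model, where every token in the dataset is processed once per epoch. The example count, token length, epoch count, and the $25-per-million-token price are all hypothetical placeholders; substitute your provider's actual pricing.

```python
def estimate_training_cost(num_examples: int,
                           avg_tokens_per_example: int,
                           epochs: int,
                           usd_per_million_tokens: float) -> float:
    """Rough training cost, assuming every token is billed once per epoch."""
    billed_tokens = num_examples * avg_tokens_per_example * epochs
    return billed_tokens / 1_000_000 * usd_per_million_tokens

# Hypothetical inputs: 2,000 examples of ~500 tokens, 3 epochs, $25 per million tokens.
cost = estimate_training_cost(2_000, 500, 3, 25.0)
print(f"${cost:,.2f}")  # 3,000,000 billed tokens -> $75.00
```

The estimate covers training only; hosting, inference, and the time spent preparing data come on top.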

It's like the difference between getting a university education (training) and completing a specialized certification program (fine tuning). The university gives you broad knowledge and thinking skills, while the certification teaches you to excel in a specific field.

Should you fine-tune or just write better prompts?

Instead of fine tuning, can’t you just write better prompts with more context? Sort of. Both approaches can improve AI performance, but they work differently and serve different needs:

Prompt Engineering

- Works with existing models through better instructions

- Changes can be implemented immediately

- Great for experimentation and quick iterations

- No additional costs beyond API usage

- Limited by the model's existing capabilities

Fine Tuning

- Creates a specialized version of the model

- Requires time and resources to implement

- Better for consistent, repeatable performance

- Ongoing costs for model hosting and maintenance

- Can teach new behaviors, formats, and patterns that are hard to get reliably through prompting alone

When to Choose Prompt Engineering:

- You need quick results

- Your requirements change frequently

- You're experimenting with different approaches

- The general model is "close enough" with good prompting

When to Choose Fine Tuning:

- You need consistent, specialized performance

- Prompt engineering isn't getting you the quality you need

- You have sufficient training data and resources

- The task is central to your business operations

Many successful implementations use both—prompt engineering for rapid prototyping and fine tuning for production systems that need reliable performance.
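One way to use both is to keep a single curated set of examples. While prototyping, you place a few of them in the prompt as prior conversation turns (few-shot prompting); if you later fine tune, the same examples can become training data. Below is a minimal Python sketch of the prompting half, assuming a chat-style message list. The instructions, example texts, and function name are hypothetical.

```python
# Hypothetical curated examples: (input, ideal reply).
EXAMPLES = [
    ("Summarize: The meeting moved to Friday.", "Meeting rescheduled to Friday."),
    ("Summarize: Invoices are now due in 30 days, not 45.", "Invoice terms cut to 30 days."),
]

INSTRUCTIONS = "Rewrite each update as a one-line summary."

def few_shot_messages(instructions, examples, new_input, k=2):
    """Build a chat prompt that shows up to k worked examples before the real input."""
    messages = [{"role": "system", "content": instructions}]
    for user_text, ideal_reply in examples[:k]:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_reply})
    messages.append({"role": "user", "content": new_input})  # the request we actually want answered
    return messages

prompt = few_shot_messages(INSTRUCTIONS, EXAMPLES,
                           "Summarize: The office closes at 4pm on Friday.")
```

Every example included this way is sent (and billed) with every request, which is one practical reason to move a stable, high-volume task to a fine-tuned model.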

Other fine tuning FAQs

How much data do you need for fine tuning?

Way less than you'd think, but way more than you'd hope. Simple tasks might need a few hundred good examples, while complex stuff could require thousands. The real trick is quality over quantity—ten really well-crafted examples that clearly show what you want are worth more than a hundred mediocre ones that confuse the model.
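Quality starts with basic hygiene. The following sketch, assuming examples are (prompt, answer) string pairs, drops blank and verbatim-duplicate examples and then holds out a small validation set so you can check whether fine tuning actually helped. It cannot judge whether an answer is good; that still takes human review. The function name and sample data are hypothetical.

```python
import random

def clean_and_split(examples, val_fraction=0.1, seed=0):
    """Drop blank and exact-duplicate pairs, then hold out a validation set."""
    seen = set()
    cleaned = []
    for prompt, answer in examples:
        key = (prompt.strip(), answer.strip())
        if not key[0] or not key[1] or key in seen:
            continue  # skip blanks and verbatim repeats
        seen.add(key)
        cleaned.append(key)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(cleaned)
    n_val = max(1, int(len(cleaned) * val_fraction)) if len(cleaned) > 1 else 0
    return cleaned[n_val:], cleaned[:n_val]  # (train, validation)

raw_examples = [
    ("How do I reset my password?", "Go to Settings > Security."),
    ("How do I reset my password?", "Go to Settings > Security."),  # verbatim duplicate
    ("Do you ship abroad?", ""),                                    # empty answer
    ("Can I change my billing date?", "Yes, under Billing."),
    ("Is there a free trial?", "Yes, for 14 days."),
]
train, val = clean_and_split(raw_examples)
print(len(train), len(val))
```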

Can you fine tune any AI model?

Most of the big players (OpenAI, Anthropic, Google) offer fine tuning as a service these days, which is convenient if you don't want to mess with the technical stuff yourself. Open-source models give you more control but require you to actually know what you're doing. Pick your poison based on how hands-on you want to get.

What happens if fine tuning goes wrong?

Your model can end up worse than when you started, like someone who picked up bad habits that are hard to unlearn. This usually happens when your training examples are inconsistent or just plain wrong. The good news is that fine tuning produces a new model rather than overwriting the original, so you can usually fall back to the base model or an earlier version instead of being stuck with a broken one.
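Inconsistent examples are often easy to catch before training. This minimal sketch flags prompts that appear more than once with different target answers, after trimming whitespace and lowercasing. It assumes (prompt, answer) pairs, and the names and data are made up. It only catches near-verbatim repeats, so paraphrased contradictions still need a human read.

```python
from collections import defaultdict

def find_conflicts(examples):
    """Return normalized prompts that map to more than one distinct answer."""
    answers = defaultdict(set)
    for prompt, answer in examples:
        answers[prompt.strip().lower()].add(answer.strip())
    return {p: sorted(a) for p, a in answers.items() if len(a) > 1}

data = [
    ("Is shipping free?", "Yes, on orders over $50."),
    ("is shipping free? ", "No, shipping is always $5."),  # contradicts the first example
    ("Can I return an item?", "Yes, within 30 days."),
]
conflicts = find_conflicts(data)
print(conflicts)
```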

Is fine tuning worth the hassle?

Depends on how much you value consistent performance. If you're fine with the occasional weird response from a general model, stick with prompt engineering. But if you need reliable, predictable behavior for business-critical stuff, fine tuning is often worth the investment. Just don't expect miracles—you're optimizing, not fundamentally changing what the model can do.
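Whether it's worth it is easier to answer with numbers. A simple approach is to score the base model and the fine-tuned model on the same held-out examples that were never used for training. The sketch below uses exact-match accuracy, which suits short, well-defined outputs such as labels, IDs, or fixed phrasings; free-form text usually needs a rubric or human grading instead. The two model functions are hypothetical stand-ins for whatever API calls you actually make.

```python
from typing import Callable, Iterable, Tuple

def exact_match_rate(model: Callable[[str], str],
                     held_out: Iterable[Tuple[str, str]]) -> float:
    """Fraction of held-out prompts where the reply matches the reference exactly."""
    pairs = list(held_out)
    if not pairs:
        return 0.0
    hits = sum(model(p).strip() == ref.strip() for p, ref in pairs)
    return hits / len(pairs)

# Hypothetical stand-ins for real model calls; replace with your own API clients.
def base_model(prompt: str) -> str:
    return "I'm not sure."

def fine_tuned_model(prompt: str) -> str:
    return {"Is shipping free?": "Yes, on orders over $50."}.get(prompt, "I'm not sure.")

held_out = [("Is shipping free?", "Yes, on orders over $50."),
            ("Can I return an item?", "Yes, within 30 days.")]

print(exact_match_rate(base_model, held_out), exact_match_rate(fine_tuned_model, held_out))
```

Running the same held-out set before and after each fine-tuning attempt also gives you an early warning when a new training run makes things worse.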