Embeddings are how AI models turn words, images, or other data into mathematical coordinates that computers can actually work with.

- Computers can’t quite read like us: they need a series of numbers, not letters or words.

- Embeddings are "GPS coordinates" for words: Every word gets assigned a specific numerical spot in a massive mathematical map.

- Closeness = Similarity: Words with similar meanings (like "cat" and "dog") end up parked right next to each other on this map.

- It’s not just for text: You can create embeddings for images, songs, and even your Netflix viewing habits.

What are embeddings in AI?

The funny thing about computers is that they are absolute superhuman wizards at math, and yet totally helpless when it comes to language.

To a computer, the word "squirrel" is just a string of meaningless characters. It doesn't know that squirrels are furry, that they have big tails, or that they are likely responsible for an underground power network that decides the fate of international geopolitics.

Embeddings solve this by translating human language (or anything, really) into the only thing computers actually understand: numbers. Once language is in numeric form, you can do all sorts of important things with it, like train an AI model.

Instead of feeding the model 150,000 raw words from a bunch of cocktail recipes and hoping a machine figures it out, embeddings distill that messy text into a clean list of numbers that represent the meaning of the content, not just the letters.

How do word embeddings work?

Imagine you are trying to organize every single word in the English language on a giant map on your wall.

Your goal is to pin words that mean similar things close together. You’d probably put "happy," "joyful," and "ecstatic" in one corner. "Sad," "miserable," and "depressed" would go in the opposite corner.

That is essentially what an embedding is. But instead of a flat 2D map on a wall, embeddings use hundreds or even thousands of dimensions 🤯.

😰 Don’t sweat the details 😰

It is impossible for a human brain to visualize a "thousand-dimensional space," so don’t try too hard. Just imagine a 3D cube, then imagine it has a headache. The point is: more dimensions allow the computer to capture more nuance (gender, plural vs. singular, verb tense, etc.) all at once.

In this mathematical space, "cat" and "dog" end up close together because they are both household pets. "Cat" and "Airplane" are miles apart. Hopefully.
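To make "close together" concrete, here is a minimal sketch using cosine similarity, one common way to measure how closely two embeddings point in the same direction. The three-number vectors and their axis meanings are invented for illustration; real embeddings come from a trained model and have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical hand-picked embeddings, NOT taken from a real model.
# Made-up axes: [is an animal, lives in a house, can fly]
toy_embeddings = {
    "cat":      [0.9, 0.8, 0.0],
    "dog":      [0.9, 0.9, 0.0],
    "airplane": [0.0, 0.1, 0.9],
}

cat_dog = cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"])
cat_airplane = cosine_similarity(toy_embeddings["cat"], toy_embeddings["airplane"])

print(f"cat vs dog:      {cat_dog:.3f}")
print(f"cat vs airplane: {cat_airplane:.3f}")
```

With these made-up numbers, "cat" and "dog" score much higher than "cat" and "airplane," which is the whole idea of the map.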

The mathematical magic is that specific relationships stay consistent. The distance and direction from "King" to "Queen" are almost identical to the distance and direction from "Man" to "Woman." The computer doesn't know what royalty is, but it knows the math matches.

Why are embeddings important for language models?

Without embeddings, AI models like ChatGPT wouldn’t work at all. They’d have no understanding of what words actually mean, and therefore no idea how to put them together into coherent sentences and paragraphs.

Armed with this embedding coordinate system, neural networks can learn semantic relationships. They stop looking at words as isolated islands and start seeing the bridges between them.

This is exactly why modern AI can look at these two sentences and know the difference:

- "The bank is closed on Sundays."

- "I sat by the river bank."

With embeddings, the model looks at the neighboring words (like "river" in one sentence and "closed on Sundays" in the other) and understands that while the word is the same, the meaning (and its mathematical coordinate) is completely different.

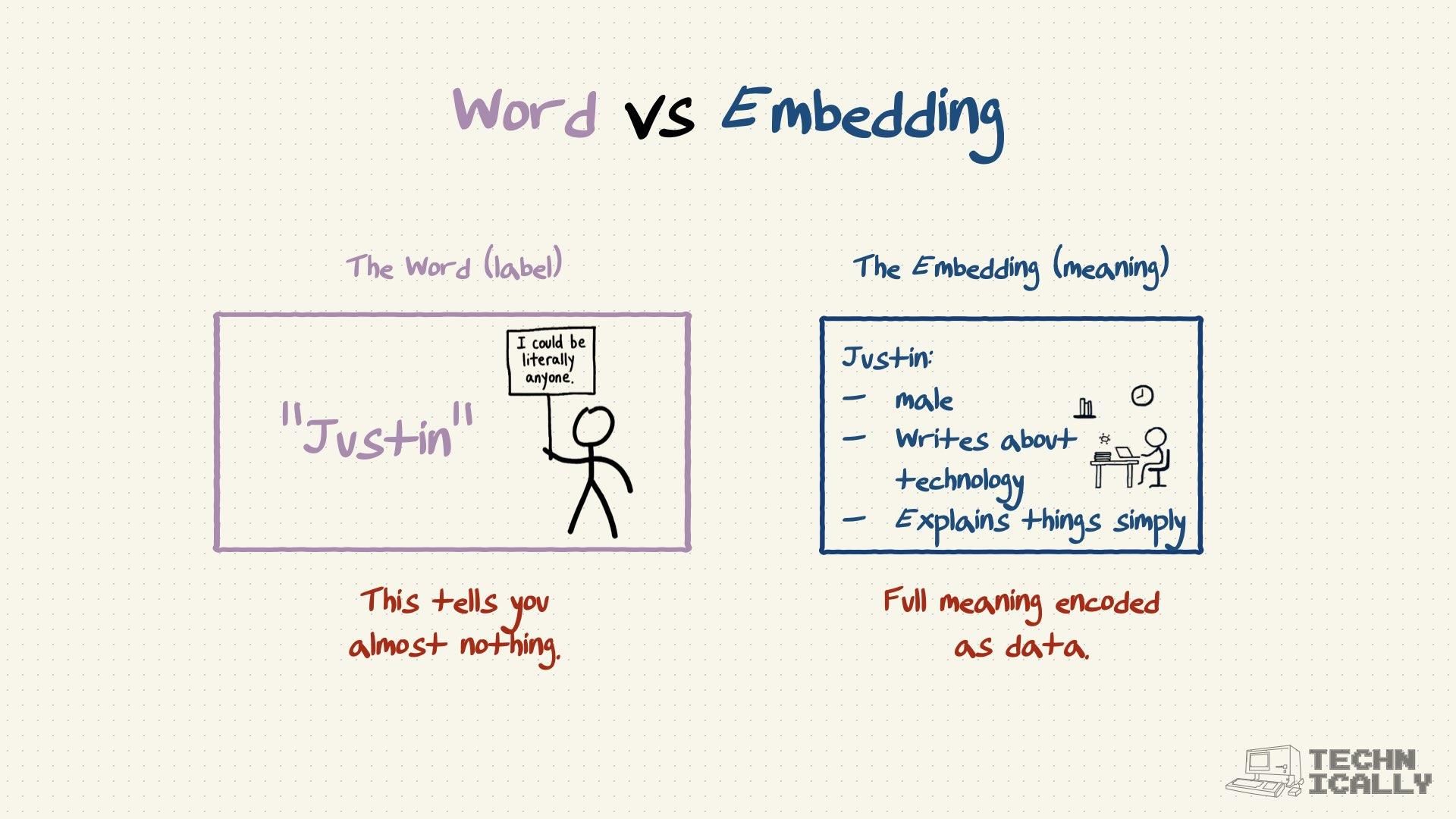

What's the difference between words and their embeddings?

Think of the difference between a word and its embedding like the difference between a person's name and their full psychological profile.

- The Word: "Justin." (This is just a label. It tells you nothing about who he is.)

- The Embedding: Male, writes about technology, explains complex topics simply.

The word is just the pointer. The embedding is the rich, data-heavy description of what that pointer actually represents in the real world.

How do neural networks use embeddings?

Embeddings are effectively the "Universal Translator" for your computer. When you type a prompt into ChatGPT, here is the pipeline:

- Tokenization: Your words get chopped up into chunks.

- Embedding: Those chunks are converted into lists of numbers (coordinates).

- Processing: The neural network does a bunch of math on those numbers and plays [the word guessing game].

- Decoding: The resulting numbers are turned back into words you can read.

This translation layer is why AI can do things that seem magic, like understanding that "car" and "automobile" are synonyms, or that "Paris" is to "France" what "Tokyo" is to "Japan." It’s not logic; it’s just geometry.
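The four steps above can be sketched as a toy pipeline. Everything here is a stand-in: the vocabulary and 2D vectors are invented, the tokenizer just splits on spaces (real ones split text into subword chunks), and the "processing" step is a plain average rather than an actual neural network.

```python
import math

# Hypothetical embedding table, invented for this sketch.
embedding_table = {
    "the": [0.1, 0.1],
    "cat": [0.9, 0.2],
    "sat": [0.3, 0.8],
    "mat": [0.7, 0.6],
}

def tokenize(text):
    """Step 1: chop the text into chunks (here, lowercase words)."""
    return text.lower().split()

def embed(tokens):
    """Step 2: look up each chunk's list of numbers."""
    return [embedding_table[token] for token in tokens]

def process(token_vectors):
    """Step 3: placeholder for the neural network; it only averages the vectors."""
    count = len(token_vectors)
    return [sum(column) / count for column in zip(*token_vectors)]

def decode(vector):
    """Step 4: turn numbers back into a word by picking the closest table entry."""
    return min(embedding_table, key=lambda word: math.dist(embedding_table[word], vector))

tokens = tokenize("The cat sat")
token_vectors = embed(tokens)
result_vector = process(token_vectors)
next_word = decode(result_vector)

print(tokens, "->", next_word)
```

A word missing from the table would raise a `KeyError` here; real tokenizers avoid that by falling back to smaller pieces of the word.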

Are embeddings only for words?

No, and this is where things get really cool. You can create embeddings for literally anything that can be turned into data.

- Images: An image of a golden retriever gets converted into numbers that represent "dog," "fur," "floppy ears," and "good boy."

- Music: Songs become mathematical representations of rhythm, melody, and genre.

- Netflix: This is part of how your "Recommended for You" section works. Netflix creates an embedding for you based on your watch history. It then looks for movies with embeddings that are mathematically close to your user embedding.

If you liked The Office, the algorithm doesn't know it's a sitcom. It just knows that The Office and Parks and Rec are neighbors in the mathematical map.
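Here is a rough sketch of that neighbor lookup. It assumes the user embedding is simply the average of the embeddings of what they watched, which is a simplification (real recommenders learn these vectors from lots of signals). The title vectors are made up, and "Space Documentary" and "Horror Movie" are placeholder titles.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical title embeddings, invented for illustration.
title_embeddings = {
    "The Office":        [0.90, 0.80, 0.10],
    "Parks and Rec":     [0.85, 0.90, 0.10],
    "Space Documentary": [0.10, 0.20, 0.90],
    "Horror Movie":      [0.10, 0.90, 0.40],
}

def user_embedding(watched):
    """Assumption: a user's embedding is the average of their watched titles."""
    vectors = [title_embeddings[title] for title in watched]
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def recommend(watched):
    """Rank unwatched titles by closeness to the user embedding, closest first."""
    user = user_embedding(watched)
    unwatched = [title for title in title_embeddings if title not in watched]
    return sorted(
        unwatched,
        key=lambda title: cosine_similarity(title_embeddings[title], user),
        reverse=True,
    )

recommendations = recommend(["The Office"])
print(recommendations)
```

Notice that nothing in the code mentions genres. The ranking falls out of the geometry alone.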

How are embeddings created?

You might think some poor linguist had to sit down and manually assign coordinates to every word in the dictionary. Thankfully, no.

The process is surprisingly elegant: the model teaches itself.

Embeddings are usually a byproduct of training a model to do something else. For example, with a system like Word2Vec, you feed the computer billions of sentences and give it a simple task: "Predict the missing word in this sentence."

- Input: "The cat sat on the ____."

- Computer guesses: "Sandwich."

- We say: "Wrong. It's 'mat'."

- Computer adjusts its numbers.

After doing this billions of times, the computer realizes that "mat," "rug," and "floor" often appear in similar spots, so it shifts their coordinates closer together to minimize its errors. The embeddings emerge naturally from the chaos.
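The guess-and-adjust loop can be sketched in a few dozen lines. This is a tiny illustration in the spirit of "predict the missing word," not Word2Vec's actual implementation: the four-sentence corpus, the 4-dimensional vectors, the one-word window, and the learning rate are all arbitrary choices made for this example.

```python
import math
import random

corpus = [
    "the cat sat on the mat",
    "the cat sat on the rug",
    "the dog sat on the mat",
    "the dog sat on the rug",
]
sentences = [line.split() for line in corpus]
vocab = sorted({word for words in sentences for word in words})
index = {word: i for i, word in enumerate(vocab)}

# Each example: (ids of the neighbouring words, id of the "missing" word).
examples = []
for words in sentences:
    for pos, target in enumerate(words):
        context = [index[words[j]] for j in (pos - 1, pos + 1) if 0 <= j < len(words)]
        examples.append((context, index[target]))

rng = random.Random(0)  # fixed seed so runs are repeatable
DIM = 4
embeddings = [[rng.uniform(-0.5, 0.5) for _ in range(DIM)] for _ in vocab]      # the coordinates we keep
output_weights = [[rng.uniform(-0.5, 0.5) for _ in range(DIM)] for _ in vocab]  # only used for guessing

def train_epoch(learning_rate=0.1):
    """One pass over all examples; returns the average prediction error."""
    total_loss = 0.0
    for context, target in examples:
        # Guess: average the context vectors, then score every word in the vocabulary.
        hidden = [sum(embeddings[c][d] for c in context) / len(context) for d in range(DIM)]
        scores = [sum(w[d] * hidden[d] for d in range(DIM)) for w in output_weights]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        probs = [e / sum(exps) for e in exps]
        total_loss += -math.log(probs[target])

        # "Wrong": the error is the predicted probability minus the right answer.
        errors = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
        grad_hidden = [sum(errors[i] * output_weights[i][d] for i in range(len(vocab)))
                       for d in range(DIM)]

        # Adjust the numbers a little in the direction that reduces the error.
        for i, err in enumerate(errors):
            for d in range(DIM):
                output_weights[i][d] -= learning_rate * err * hidden[d]
        for c in context:
            for d in range(DIM):
                embeddings[c][d] -= learning_rate * grad_hidden[d] / len(context)
    return total_loss / len(examples)

first_loss = train_epoch()
for _ in range(300):
    last_loss = train_epoch()

print(f"average error, first pass: {first_loss:.3f}")
print(f"average error, last pass:  {last_loss:.3f}")
```

The error should drop as the passes go by. Whether "mat" and "rug" actually land next to each other depends on the random start and on how little data this toy has; real systems need vastly more text for the clusters to become reliable.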

In practice, there are tons of off-the-shelf models you can use for embeddings, including a few from OpenAI.

Frequently Asked Questions About Embeddings

Can you visualize embeddings?

Sort of, but it’s like trying to draw a map of the entire universe on a post-it note. Researchers use techniques (like t-SNE) to squash these 1,000-dimensional concepts down into 2D or 3D charts. You can see cool clusters (like all the fruits hanging out together or all the chefs in one corner), but you lose a ton of nuance in the process.

How big are embeddings?

It depends on how smart you want your model to be. Older models might use 100-300 dimensions (numbers) per word. The massive brains behind GPT-5 are likely using tens of thousands of dimensions. More dimensions can capture more nuance, but they also require a hell of a lot more computing power to process.
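For a rough feel of how size scales, here is a back-of-the-envelope sketch of how much memory one embedding table takes. The 50,000-word vocabulary and 4 bytes per number are assumptions picked for the example, and this covers storage only, not the compute needed to process the vectors.

```python
def embedding_table_megabytes(vocab_size, dimensions, bytes_per_number=4):
    """Memory for one embedding table: one vector of `dimensions` numbers per vocabulary entry."""
    return vocab_size * dimensions * bytes_per_number / 1_000_000

# Hypothetical 50,000-entry vocabulary at two different embedding sizes.
small_table_mb = embedding_table_megabytes(vocab_size=50_000, dimensions=300)
large_table_mb = embedding_table_megabytes(vocab_size=50_000, dimensions=3_000)

print(f"300 dimensions:   {small_table_mb:.0f} MB")
print(f"3,000 dimensions: {large_table_mb:.0f} MB")
```

Ten times the dimensions means ten times the storage for the table, before the rest of the model is even counted.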

Who invented embeddings?

The concept has been around in academia for decades, but Word2Vec (released by Google in 2013) was the "iPhone moment" for embeddings. It proved you could capture surprisingly deep relationships between words using relatively simple math.

Do embeddings work in all languages?

Yes! And this is one of the best features. You can create embeddings for Spanish, Japanese, or Swahili. Even cooler? In multilingual models, "Cat" in English and "Gato" in Spanish end up in the same neighborhood. The math creates a universal bridge between languages.

What happens when you add or subtract embeddings?

This is the party trick of the AI world. You can literally do math with concepts.

- King - Man + Woman ≈ Queen

- Paris - France + Italy ≈ Rome

It doesn't work perfectly every time, but the fact that you can do arithmetic with meaning is pretty mind-blowing.
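Here is a small sketch of that arithmetic. The 2D vectors and their axis meanings are invented so the numbers are easy to follow; a real model would have hundreds of dimensions and a much messier picture. Leaving the three input words out of the search is a common convention, since one of them would otherwise often be the nearest match.

```python
import math

# Hypothetical hand-picked embeddings, NOT taken from a real model.
# Made-up axes: [royalty, femininity]
vectors = {
    "king":  [0.95, 0.10],
    "queen": [0.90, 0.90],
    "man":   [0.10, 0.05],
    "woman": [0.12, 0.88],
    "apple": [0.02, 0.40],
}

def analogy(a, b, c):
    """Return the word closest to (a - b + c), skipping the three input words."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [word for word in vectors if word not in (a, b, c)]
    return min(candidates, key=lambda word: math.dist(vectors[word], target))

result = analogy("king", "man", "woman")
print("king - man + woman is closest to:", result)
```

With these toy numbers the computed point lands close to "queen" but not exactly on it, which is why the formulas above use ≈ rather than =.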