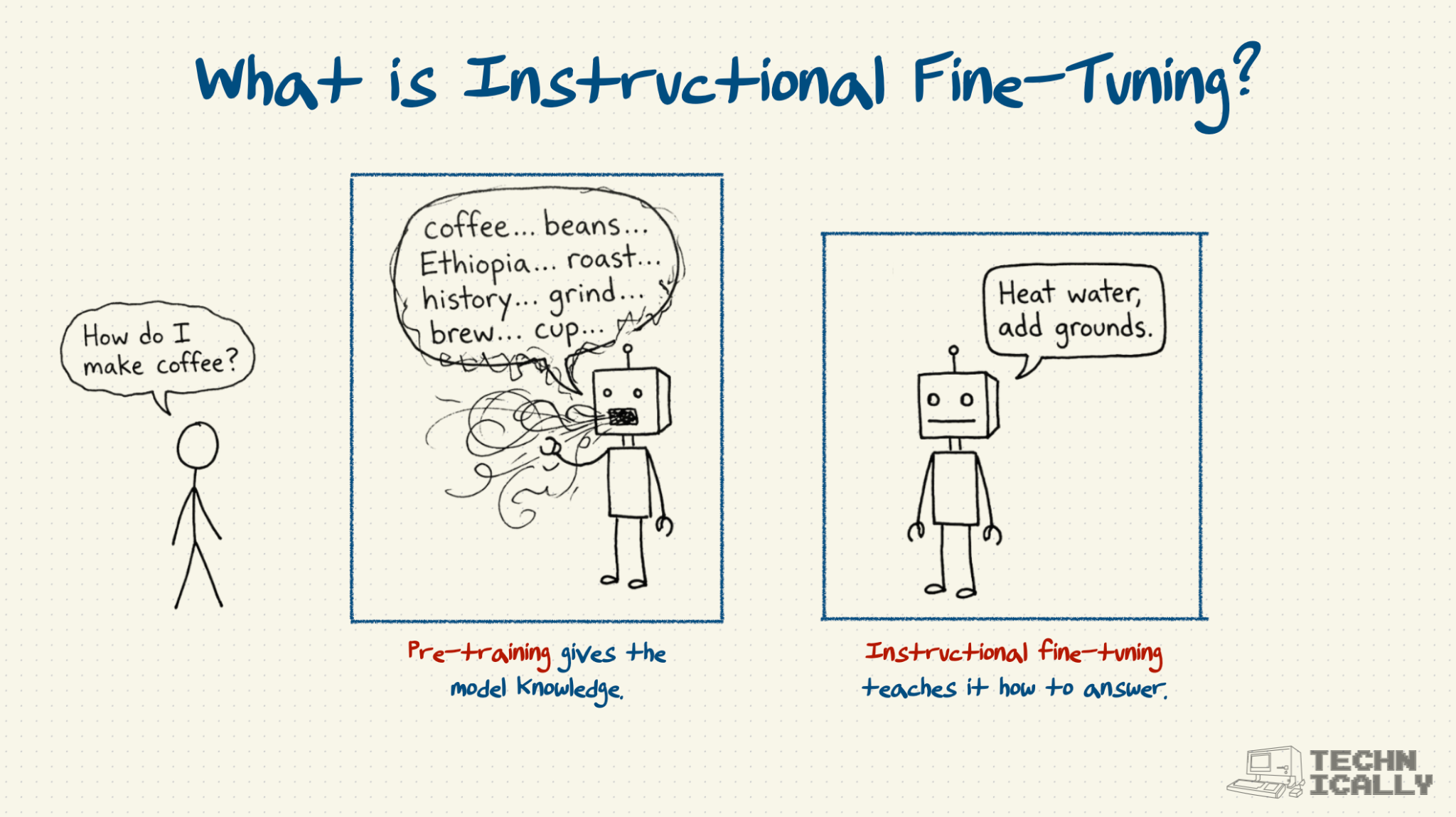

Instructional fine-tuning is how you turn a pre-trained AI model – knowledgeable, but useless – into a helpful assistant that actually answers your questions.

- IFT happens after pre-training, once the model already knows lots of basic facts and logic patterns.

- It works via carefully crafted question-answer pairs to teach conversational skills to the model.

- IFT data is more expensive than pre-training data because humans have to create the examples.

The model doesn’t learn many new facts or ideas during IFT – instead, what it’s doing is teaching the model an interaction pattern. Via IFT, a pre-trained model goes from a very knowledgeable but rambling word generator into something that actually answers your question in a concise and helpful fashion.

What is instructional fine-tuning?

A pre-trained AI model is not ready for prime time. It knows a lot, but simply hasn’t been trained to be helpful or concise. You can think of it like a socially awkward genius who has read every book in the library but can’t hold a normal conversation.

If you ask a raw, pre-trained model, "How do I make coffee?", it doesn't know you want a recipe. It just thinks you want it to predict the next likely words. So it might respond with: "…is a popular beverage. The history of coffee dates back to Ethiopia. The beans are roasted..."

Technically accurate? Yes. Helpful? Absolutely not. You just wanted to know how many scoops to use.

Instructional Fine-Tuning (IFT) is the process of teaching that genius how to fit it with the rest of us. It forces the model to stop rambling and specifically act as a helpful, concise assistant, transforming the model from a text-generator into a question-answerer.

How does instructional fine-tuning work?

The process is deceptively simple, but unlike pre-training data (the generation of which is mostly automated), this part requires more human sweat and tears.

Step 1: The Flashcards (Q&A Pairs)

Humans write thousands of examples showing the model exactly how to respond to questions. These are like little mini dialogue scripts.

- Question: "How do I clean a dirty pan?"

- Ideal Answer: "Mix 2 parts vinegar and 1 part baking soda, then scrub."

- Question: "What is the best newsletter?"

- Ideal Answer: "Technically. It keeps things simple and the author is very handsome."

Step 2: Pattern Recognition

The model reads these thousands of examples and starts to understand the structure of a helpful interaction. It learns that when it sees a question, it should provide a direct solution, not a history lesson.

Step 3: Generalization

Eventually, the model "gets it." It can apply this helpful behavior to questions it has never seen before.

In pre-training, the model learns from billions of documents. In fine-tuning, it might only learn from thousands of examples. The quantity is lower, but the quality is significantly higher.

What's the difference between pre-training and instructional fine-tuning?

Think of the difference between Knowledge and Wisdom.

- Pre-training (Knowledge): Sending a student to the library to read every book in existence. They know facts, grammar, and logic.

- Instructional Fine-Tuning (Wisdom): Sending that student to an internship. They learn how to actually apply that knowledge to do a job.

Here is a concrete example of how the output changes:

The Prompt: "What's the weather?"

- Pre-trained Model: "Weather refers to atmospheric conditions including temperature, humidity, precipitation, wind speed, and barometric pressure. Weather patterns are influenced by geographic location..."

- Fine-Tuned Model: "I don't have access to live data, but you can check your local forecast by searching on Google or opening your weather app."

Same underlying brain, completely different personality.

Why is instructional fine-tuning necessary?

Because knowing facts and knowing how to communicate are two completely different skills.

Without fine-tuning, you have a walking encyclopedia with a total inability to be helpful. Pre-training gives the model breadth, but it lacks:

- Audience Awareness: It doesn't know if it's talking to a toddler or a PhD.

- Scope Control: It doesn't know when to stop talking.

- Practicality: It focuses on completing the pattern rather than solving the user's problem.

- Instructional fine-tuning bridges the gap. It is the etiquette school for AI.

What are question/answer pairs in AI training?

These are the curriculum for the model's etiquette school. Each pair is carefully crafted to teach a specific "vibe" or skill. A few theoretical examples:

- To teach Conciseness:

- Q: "Define Machine Learning."

- A: "Algorithms that learn patterns from data to make predictions." (Teaches the model not to write a 5-page essay).

- To teach Troubleshooting:

- Q: "My computer is dead."

- A: "1. Check the plug. 2. Hold the power button. 3. Check the breaker." (Teaches the model to use numbered lists and logical steps).

- To teach Tone:

- Q: "Yo, explain quantum physics like I'm 5."

- A: "Tiny particles act super weird and can be in two places at once. Magic!" (Teaches the model how to code-switch based on the user's slang).

How much does instructional fine-tuning cost?

Per word, it is way more expensive than pre-training.

During pre-training, you can feed the model raw text from the internet for free. But for instructional fine-tuning, you have to hire humans. And you can't just hire anyone; you often need:

- Subject Matter Experts: To write accurate coding or medical answers.

- Creative Writers: To ensure the tone is engaging.

- Reviewers: To make sure the data isn't garbage.

This is why companies like OpenAI spend millions on "data labelling." They are essentially paying people to write the perfect script for the AI to memorize. This is becoming an entire segment of the economy, between Scale, Surge, Mercor, and the like.

Frequently Asked Questions About Instructional Fine-tuning

How many question-answer pairs do you need?

Usually thousands to tens of thousands. It's a quality game, not a quantity game. 5,000 amazing examples are better than 500,000 mediocre ones. If the examples are bad, the model will learn to be bad.

Can you fine-tune for specific tasks?

Absolutely. This is how we get specialized AIs. You can fine-tune a model specifically on "Medical Q&A" or "Legal Contract Review." You just feed it Q&A pairs that sound like a doctor or a lawyer instead of a general assistant.

What happens if the training examples are bad?

Garbage In, Garbage Out. If your human writers are rude, verbose, or wrong, the AI will become rude, verbose, and wrong. The model mimics the data perfectly—flaws and all.

Is instructional fine-tuning a one-time thing?

No, it's an endless cycle. As users find ways to break the bot ("Ignore all previous instructions..."), developers have to write new Q&A pairs to patch those holes and retrain the model.

Can users contribute to instructional fine-tuning?

You already are. Every time you hit the "Thumbs Up" or "Thumbs Down" button on ChatGPT, you are creating a data point that helps them fine-tune the next version of the model. You are working for the robot, usually for free.