Inference is a fancy term that just means using an ML model that has already been trained.

- It's the "productive work" phase when AI models actually help solve real problems

- Inference happens after training is complete—no more learning, just applying what was learned

- Like taking a test versus studying for it—you use knowledge without acquiring new knowledge

- Doing inference well, so that models run fast and efficiently, is no simple feat

If you’ve ever used Claude or ChatGPT, congrats, you’ve done inference.

What is AI inference?


Think of it this way: if training is like going to school, then inference is like using your education to do your job. The learning phase is over, and now you're applying what you know to solve real problems.

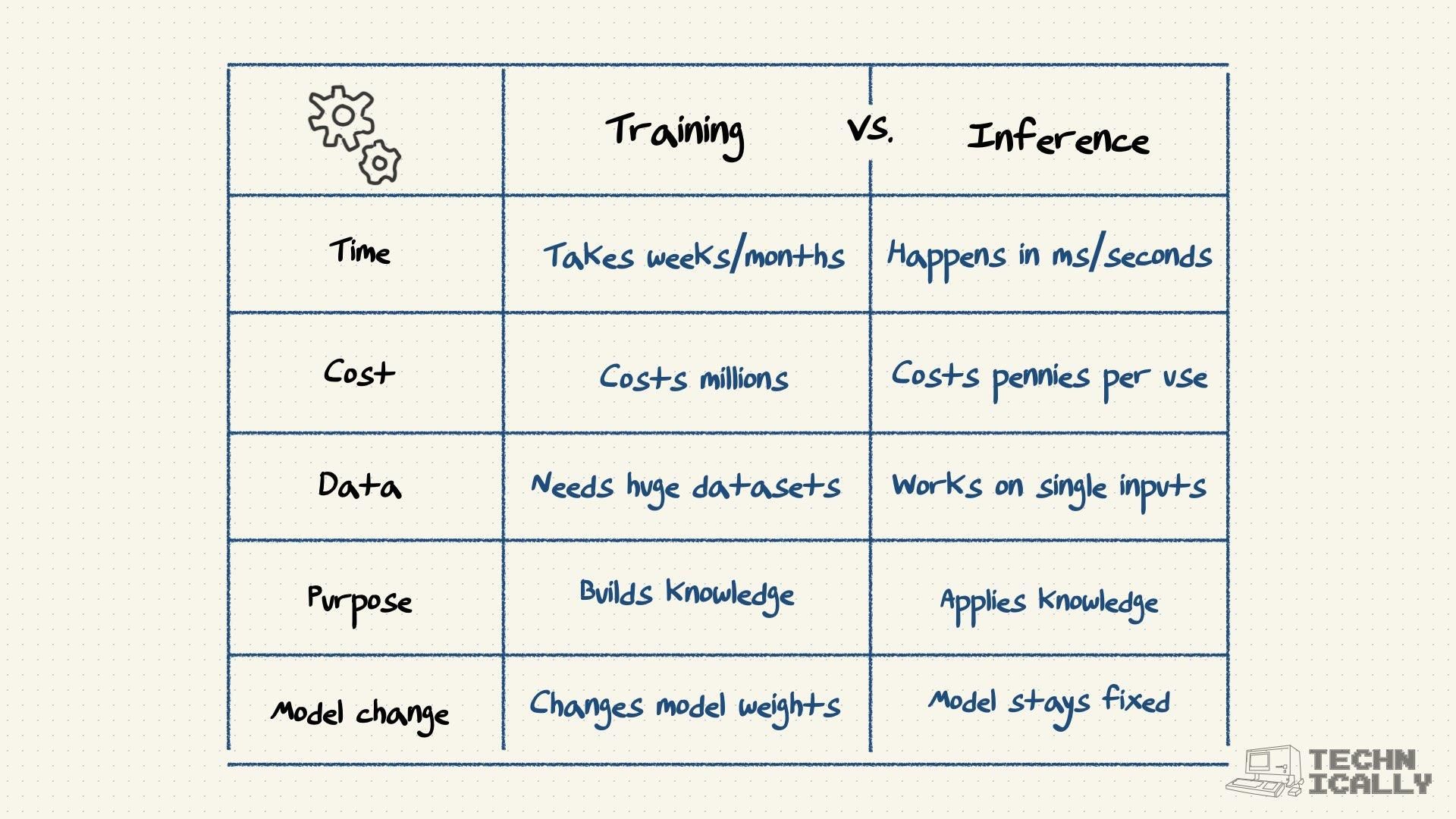

During training, an AI model learns patterns from massive datasets; this is expensive, time-consuming, and requires enormous computational resources. During inference, the model uses those learned patterns to make predictions, generate text, recognize images, or do whatever task it was trained for. This happens much faster and with far fewer resources per request (although every request still needs some compute, which adds up at scale).
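Here's a deliberately tiny sketch of that split. It assumes a two-parameter linear model; real models have billions of parameters, but the division of labor is the same. The names `train` and `infer` are just illustrative. Training runs once and produces parameters, and inference only reads them.

```python
# Toy illustration only: fit y = w*x + b by ordinary least squares ("training"),
# then reuse the frozen w and b ("inference") on an input the model never saw.


def train(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Training: learn parameters (w, b) from data. Done once, up front."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def infer(params: tuple[float, float], x: float) -> float:
    """Inference: apply the learned parameters. Nothing gets updated here."""
    w, b = params
    return w * x + b


params = train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])  # learns y = 2x + 1
print(infer(params, 10.0))  # 21.0
```

Calling `infer` a million times never changes `params`. That is the "no more learning, just applying" part.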

Every time you chat with ChatGPT, ask Siri a question, or get a recommendation from Netflix, you're experiencing AI inference in action. The heavy lifting of learning has already been done; now the model is just applying its knowledge.

How does AI inference work?

AI inference follows a straightforward process that may seem complex but, when all goes well, happens incredibly quickly:

Input Processing

Your question, image, or data gets converted into the mathematical format the model understands (usually involving [[tokenization:tokenization]] for text or feature extraction for other data types).

Pattern Matching

The model applies all the patterns it learned during training to your specific input, calculating probabilities and relationships.

Computation

The model processes your input through its learned parameters—this is where the "magic" happens, but it's actually just very fast mathematical operations.

Output Generation

The model converts its mathematical conclusions back into human-readable form—text, classifications, recommendations, or whatever format you need.

Post-Processing

The raw output often gets cleaned up, formatted, or filtered before you see the final result.

This entire process typically happens in seconds or less, even though the model might have billions of parameters working together.
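To make those steps concrete, here's a toy sketch in Python. Everything in it is hypothetical. A four-word vocabulary and hand-picked weights stand in for a trained model, and whitespace splitting stands in for real tokenization. Each function maps to one of the steps above, with pattern matching and computation folded together into `compute`.

```python
import math

# Hypothetical "trained" parameters for a toy sentiment model.
# A real model would have millions or billions of learned weights.
VOCAB = {"great": 0, "love": 1, "terrible": 2, "slow": 3}
WEIGHTS = [1.5, 2.0, -2.5, -1.0]
BIAS = 0.1


def preprocess(text: str) -> list[int]:
    """Input processing: turn raw text into token ids.
    Lowercasing and whitespace splitting stand in for a real tokenizer."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    return [VOCAB[w] for w in words if w in VOCAB]


def compute(token_ids: list[int]) -> float:
    """Pattern matching + computation: combine the learned weights with the
    input and squash the score into a probability with a sigmoid."""
    score = BIAS + sum(WEIGHTS[i] for i in token_ids)
    return 1.0 / (1.0 + math.exp(-score))


def generate_output(prob: float) -> str:
    """Output generation: map the number back to a human-readable label."""
    return "positive" if prob >= 0.5 else "negative"


def postprocess(label: str, prob: float) -> str:
    """Post-processing: format the result for display."""
    confidence = prob if label == "positive" else 1.0 - prob
    return f"{label} ({confidence:.0%} confidence)"


def run_inference(text: str) -> str:
    token_ids = preprocess(text)
    prob = compute(token_ids)
    return postprocess(generate_output(prob), prob)


print(run_inference("I love this, great app!"))  # positive (97% confidence)
```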

What does it cost to run inference?

Unlike training costs (which are massive, largely up-front expenses), inference costs are ongoing operational expenses that scale with usage:

Per-Request Pricing

Most AI services charge based on tokens processed, [[API calls:api-calls]] made, or compute time used. This makes each request's cost predictable, but the total grows with every request, so careful optimization pays off.
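A quick back-of-the-envelope calculation shows how per-token pricing scales. The prices below are made-up placeholders, not any provider's actual rates. The point is that cost grows linearly with both tokens per request and request volume.

```python
# Hypothetical prices. Real providers publish their own per-token rates,
# usually quoted per million tokens and different for input vs. output.
INPUT_PRICE_PER_M = 3.00    # USD per 1M input tokens (assumed)
OUTPUT_PRICE_PER_M = 15.00  # USD per 1M output tokens (assumed)


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request under simple per-token pricing."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 days: int = 30) -> float:
    """The same request, repeated at volume: cost scales linearly with usage."""
    return request_cost(input_tokens, output_tokens) * requests_per_day * days


per_request = request_cost(1_000, 500)  # 0.003 (input) + 0.0075 (output)
print(f"${per_request:.4f} per request")                       # $0.0105 per request
print(f"${monthly_cost(10_000, 1_000, 500):,.2f} per month")   # $3,150.00 per month
```

In this sketch, trimming the prompt from 1,000 to 500 input tokens saves $0.0015 per request, or $450 a month at 10,000 requests a day. That's why prompt optimization is a real cost lever.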

Infrastructure Costs

If you're running your own models, you pay for servers, GPUs, memory, and bandwidth. Cloud providers offer various pricing models from pay-per-use to reserved capacity.
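Choosing between pay-per-use and reserved capacity mostly comes down to a break-even calculation. The hourly and monthly rates here are hypothetical, so plug in your provider's real numbers. The sketch also ignores things like minimum commitments and idle-time billing.

```python
# Hypothetical GPU pricing. Actual cloud rates vary widely by provider,
# region, GPU type, and commitment length.
ON_DEMAND_PER_HOUR = 4.00      # USD per hour, billed only for hours used (assumed)
RESERVED_PER_MONTH = 1_800.00  # USD per month, flat fee for dedicated capacity (assumed)


def on_demand_cost(hours_used: float) -> float:
    return hours_used * ON_DEMAND_PER_HOUR


def break_even_hours() -> float:
    """Monthly usage above which the reserved plan becomes cheaper."""
    return RESERVED_PER_MONTH / ON_DEMAND_PER_HOUR


def cheaper_option(hours_used: float) -> str:
    return "reserved" if on_demand_cost(hours_used) > RESERVED_PER_MONTH else "pay-per-use"


print(break_even_hours())   # 450.0 (hours per month)
print(cheaper_option(200))  # pay-per-use
print(cheaper_option(600))  # reserved
```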

Hidden Costs

- Data preprocessing and postprocessing

- Model loading and initialization

- Error handling and retry logic

- Monitoring and logging systems

Cost Optimization Strategies

- Use smaller models when possible

- Optimize prompts to reduce token usage

Edge inference vs cloud inference

If you’re using something like ChatGPT or Claude, you’re doing inference in the cloud: OpenAI and Anthropic run these models on massive cloud servers, and you tap into them whenever you use the product. But there’s another way to deploy models for inference: directly on the device you’re using. This is called the “edge,” and which of the two you choose has major implications for cost, speed, and privacy:

Cloud Inference

- Pros: Access to powerful hardware, automatic scaling, no infrastructure management

- Cons: Network latency, ongoing API costs, data privacy concerns

- Best for: Applications that need the most capable models and can tolerate slight latency

Edge Inference

- Pros: No network dependency, better privacy, lower ongoing costs, faster response times

- Cons: Limited by local hardware, higher upfront costs, model management complexity

- Best for: Real-time applications, privacy-sensitive use cases, offline requirements

Hybrid Approaches

Many successful applications use both—edge inference for simple, fast decisions and cloud inference for complex analysis that requires more powerful models.
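A hybrid setup needs some rule for deciding where each request goes. Here's one minimal sketch. It assumes a crude heuristic (prompt length plus a few keywords) and uses two stub functions in place of real edge and cloud models. None of these names come from an actual SDK. Production systems often use a small classifier or a confidence score to make this call instead.

```python
from dataclasses import dataclass

# Hypothetical keywords that mark a request as "complex" (an assumption).
COMPLEX_HINTS = ("analyze", "compare", "summarize", "explain why")


@dataclass
class Result:
    answer: str
    source: str  # "edge" or "cloud"


def edge_model(prompt: str) -> str:
    return f"quick answer to: {prompt}"  # stub for a small on-device model


def cloud_model(prompt: str) -> str:
    return f"detailed analysis of: {prompt}"  # stub for a large hosted model


def is_complex(prompt: str, max_simple_words: int = 20) -> bool:
    text = prompt.lower()
    return len(text.split()) > max_simple_words or any(h in text for h in COMPLEX_HINTS)


def route(prompt: str, online: bool = True) -> Result:
    """Simple requests stay on-device. Complex ones go to the cloud when a
    connection is available; otherwise they fall back to the edge model."""
    if is_complex(prompt) and online:
        return Result(cloud_model(prompt), "cloud")
    return Result(edge_model(prompt), "edge")


print(route("set a timer for 5 minutes").source)                  # edge
print(route("compare these two contracts").source)                # cloud
print(route("compare these two contracts", online=False).source)  # edge
```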

Inference optimization techniques

Making inference faster and cheaper is an entire field of engineering:

Model Optimization

- Quantization: Using lower-precision numbers (for example, 8-bit integers instead of 32-bit floats) to reduce memory use and increase speed

- Pruning: Removing unnecessary parts of the model without significantly hurting performance

- Distillation: Training smaller models to mimic larger ones
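Quantization is the easiest of these to show in a few lines. This sketch uses symmetric per-tensor int8 quantization, one common scheme among several. Production toolkits add calibration, per-channel scales, and low-precision kernels on top of this. Each weight drops from 4 bytes to 1, at the cost of a small rounding error.

```python
from array import array

# Symmetric per-tensor int8 quantization: one common scheme among several.


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats onto integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values when the weights are used."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)

print(q)  # [42, -127, 0, 90]
print([round(a - w, 4) for a, w in zip(approx, weights)])  # small rounding errors

# Storage per weight: 4 bytes as float32 vs. 1 byte as int8.
print(array("f", weights).itemsize, "->", array("b", q).itemsize)  # 4 -> 1
```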

Hardware Optimization

- GPU Acceleration: Using graphics cards optimized for parallel processing

- Specialized Chips: TPUs, FPGAs, and custom AI chips designed specifically for inference

- Memory Optimization: Ensuring models fit efficiently in available memory

Software Optimization

- Batching: Processing multiple requests simultaneously

- Caching: Storing common responses to avoid recomputation

- Load Balancing: Distributing requests across multiple servers
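Batching and caching both reduce how much model work each request needs. The sketch below uses a hypothetical `model_forward` stub with a call counter so you can see the savings. `functools.lru_cache` is a real standard-library decorator, but the rest is illustrative. Note that caching like this only helps when identical requests repeat exactly.

```python
from functools import lru_cache

calls = {"model": 0}  # counts how often the (expensive) model actually runs


def model_forward(batch: list[str]) -> list[str]:
    """Hypothetical model that processes a whole batch in a single call."""
    calls["model"] += 1
    return [text.upper() for text in batch]  # placeholder "prediction"


def batched_infer(requests: list[str], batch_size: int = 4) -> list[str]:
    """Batching: group requests so the model runs once per batch."""
    outputs: list[str] = []
    for start in range(0, len(requests), batch_size):
        outputs.extend(model_forward(requests[start:start + batch_size]))
    return outputs


@lru_cache(maxsize=1024)
def cached_infer(text: str) -> str:
    """Caching: identical requests reuse the stored response."""
    return model_forward([text])[0]


batched_infer([f"request {i}" for i in range(10)])
print(calls["model"])  # 3 model calls for 10 requests (batches of 4, 4, 2)

calls["model"] = 0
for _ in range(5):
    cached_infer("what are your opening hours?")
print(calls["model"])  # 1: the other four lookups were cache hits
```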

Other FAQs about inference

Can you improve a model's performance without retraining?

There are several tricks you can use at inference time: better prompting techniques, combining multiple models (like getting a second opinion), using retrieval-augmented generation (RAG) to pull in fresh information, and adding filters to clean up outputs. But if you want fundamental improvements to what the model can actually do, you're usually looking at retraining or fine-tuning territory.
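The "second opinion" idea can be as simple as a majority vote. The three classifiers below are trivial keyword stubs standing in for real models; the voting logic is the part that carries over. None of the models change, so this is purely an inference-time trick.

```python
from collections import Counter
from typing import Callable


# Trivial keyword "models" standing in for three real classifiers (hypothetical).
def model_a(text: str) -> str:
    return "spam" if "free" in text.lower() else "ham"


def model_b(text: str) -> str:
    return "spam" if "winner" in text.lower() else "ham"


def model_c(text: str) -> str:
    return "spam" if "!!!" in text else "ham"


def majority_vote(models: list[Callable[[str], str]], text: str) -> str:
    """Run every model on the same input and return the most common answer.
    With an odd number of models and two labels, there are no ties."""
    votes = Counter(model(text) for model in models)
    return votes.most_common(1)[0][0]


ensemble = [model_a, model_b, model_c]
print(majority_vote(ensemble, "FREE cruise, you are a WINNER"))  # spam (2 of 3 votes)
print(majority_vote(ensemble, "Free lunch in the kitchen"))      # ham (only model_a says spam)
```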

What's the difference between inference and prediction?

People use these terms pretty interchangeably, but "prediction" usually means forecasting future stuff, while "inference" covers the whole range of AI tasks—classification, text generation, analysis, whatever. All predictions are inferences, but not all inferences are predictions.

How do you measure inference performance?

Four main things to watch: how fast the model responds (latency), how many requests it can handle per second (throughput), how good the outputs are (quality), and how much each request costs. Which one matters most depends on what you're building: real-time apps care about speed, while batch processing jobs care about throughput and cost.
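Latency, throughput, and cost are easy to compute from request logs. Quality usually needs task-specific evaluation, so it's left out here. This sketch assumes you've recorded each request's latency, the total wall-clock time, and the total bill; the sample numbers are made up. Percentiles matter because averages hide slow outliers. In the sample, one 300 ms request barely affects the median (100 ms) but sets the p95.

```python
import math
import statistics


def percentile(sorted_values: list[float], p: float) -> float:
    """Nearest-rank percentile: simple and good enough for a quick report."""
    rank = max(1, math.ceil(p / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(latencies_ms: list[float], wall_clock_s: float,
              total_cost_usd: float) -> dict[str, float]:
    """Latency, throughput, and cost for a set of completed requests."""
    ordered = sorted(latencies_ms)
    n = len(ordered)
    return {
        "p50_latency_ms": percentile(ordered, 50),
        "p95_latency_ms": percentile(ordered, 95),
        "mean_latency_ms": statistics.mean(ordered),
        "throughput_rps": n / wall_clock_s,  # completed requests per second
        "cost_per_request_usd": total_cost_usd / n,
    }


# Made-up sample: 10 requests finished over 2 seconds for a total of $0.05.
latencies = [120, 80, 95, 300, 110, 90, 105, 85, 100, 115]
print(summarize(latencies, wall_clock_s=2.0, total_cost_usd=0.05))
```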

Can inference work without internet?

Absolutely. Edge inference runs locally on your device with no internet required. Common in mobile apps and privacy-sensitive stuff where you don't want data leaving the device. The trade-off is you're usually stuck with smaller, less capable models because of hardware limitations—your phone can't run the same models that live on massive server farms.

What happens when inference fails?

Depends on how you've set things up. Good systems have fallback plans: try a backup model, escalate to humans, or gracefully degrade (maybe switch from AI-generated responses to canned ones). For critical applications, you definitely want error handling built in from day one—nobody wants their customer service bot to just crash when things go sideways.
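A fallback chain is one common way to build that in from day one. In this sketch, `primary_model`, `backup_model`, and `ModelUnavailable` are hypothetical stand-ins. The primary is hard-coded to fail so the fallback path runs. If every model fails, the function degrades to a canned response that hands off to a human.

```python
from typing import Callable

CANNED_RESPONSE = "Sorry, I can't answer that right now. A human agent will follow up."


class ModelUnavailable(Exception):
    """Hypothetical error raised when a model call fails or times out."""


def primary_model(prompt: str) -> str:
    raise ModelUnavailable("primary model timed out")  # simulate an outage


def backup_model(prompt: str) -> str:
    return f"[backup] answer to: {prompt}"


def answer_with_fallback(prompt: str,
                         models: list[Callable[[str], str]]) -> tuple[str, str]:
    """Try each model in order; if all of them fail, degrade to a canned reply."""
    for model in models:
        try:
            return model(prompt), model.__name__
        except ModelUnavailable:
            continue  # in production: log the failure, maybe retry with backoff
    return CANNED_RESPONSE, "canned"


reply, source = answer_with_fallback("Where is my order?", [primary_model, backup_model])
print(source)  # backup_model
```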

How secure is inference?

Security has two sides: protecting the model itself (from theft or tampering) and protecting your data (from unauthorized access). This usually means encrypted communications, secure hosting, input validation, and audit logs. For really sensitive stuff, there are even techniques that let you run inference on encrypted data, though that gets pretty complex pretty fast.